This article is intended for a general audience interested in exploring AWS cost management practices from a DevOps standpoint. Let's get started…

DevOps has become a common term in nearly every team and organization, although it is still relatively new to some legacy companies that have yet to explore it. Contrary to what many assume, DevOps is not a practice like other software engineering or management disciplines. Rather, it is a team mindset built around standardized workflows and relevant automation to deliver products and services at high velocity.

Most functional DevOps teams leverage cloud providers to enhance their pipelines. Even though these teams work actively with cloud-native services, there is still a large gap in maintaining cost-effective strategies for running those pipelines. Considerations such as billing management, cost-control approaches, and Total Cost of Ownership (TCO) push teams to build effective mechanisms for monitoring and controlling costs. These approaches also encourage teams to think about cost-effective strategies while architecting solutions on cloud platforms.

A few actions are discussed below to give a solid understanding of how DevOps strategies can be applied to AWS cost management.

User Management & Ownership

It's always recommended to configure user- and role-based permissions at the most granular level possible. Also, use Service Control Policies (SCPs) when managing permissions within your organization. Users can also be pre-approved and authenticated through third-party public identity providers or OpenID Connect (OIDC).

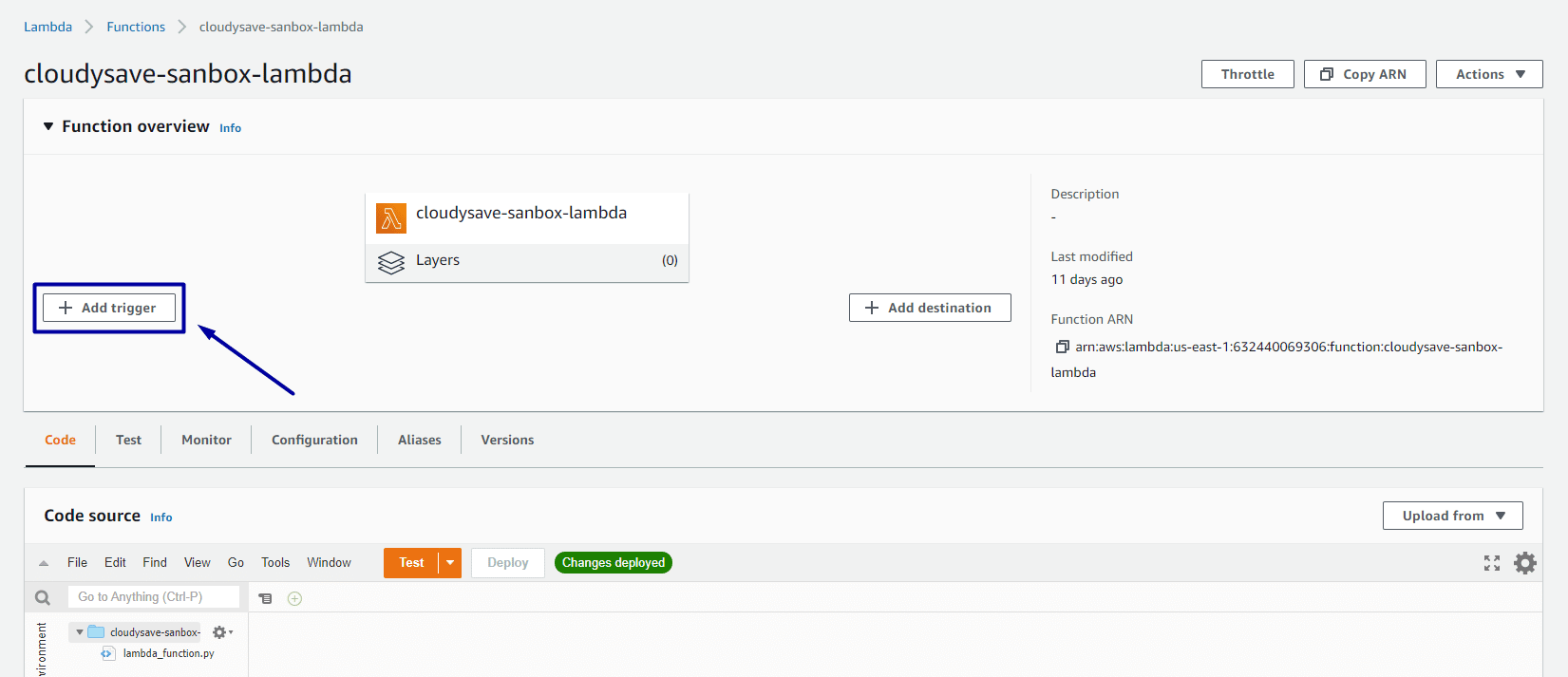

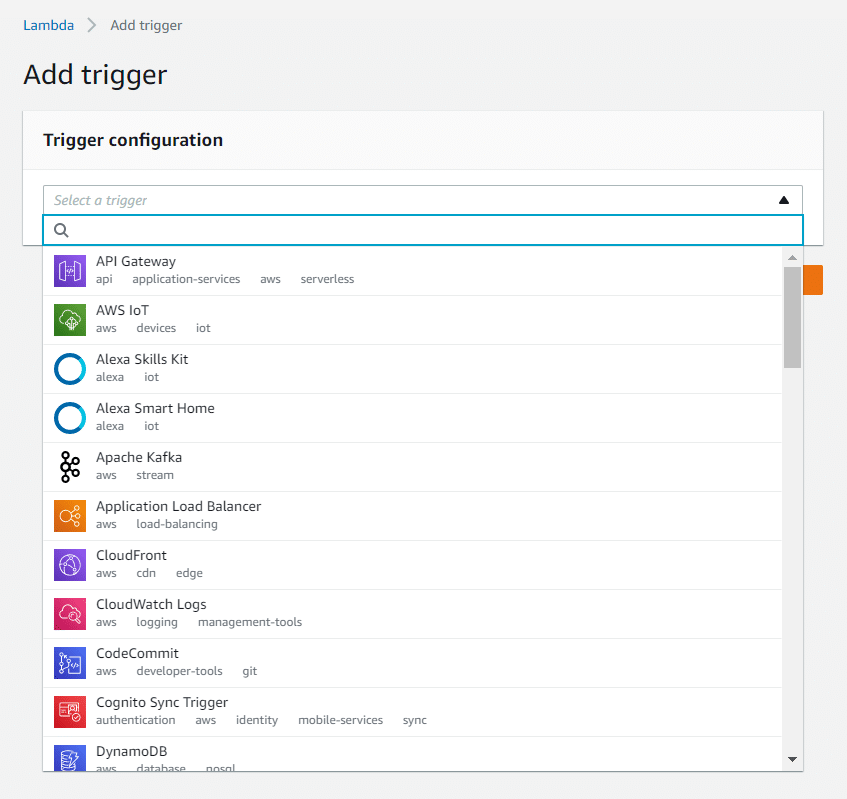

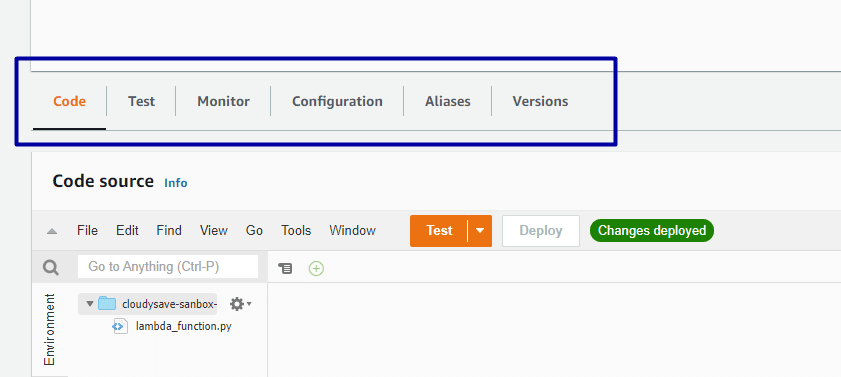

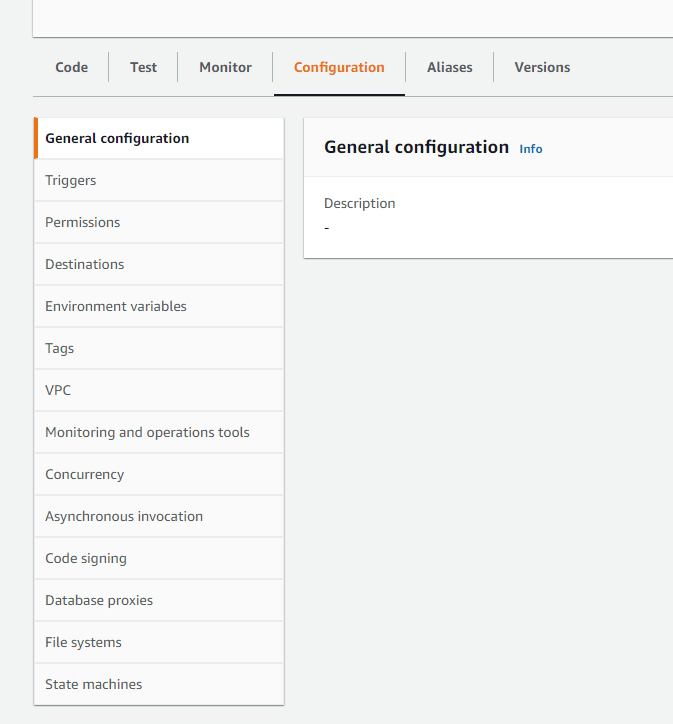

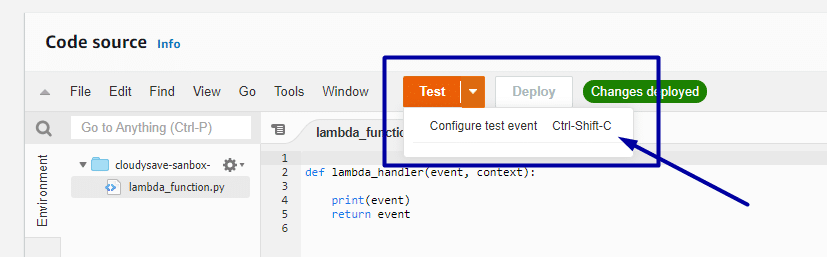

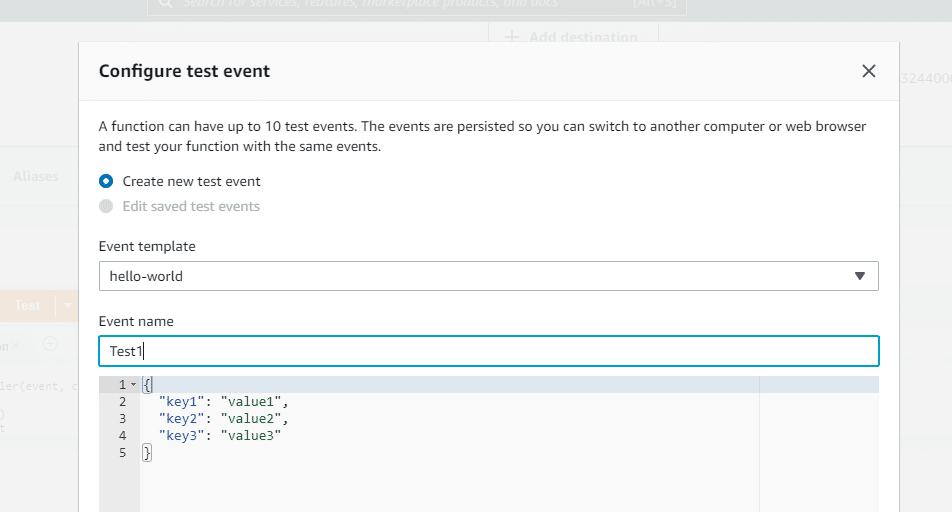

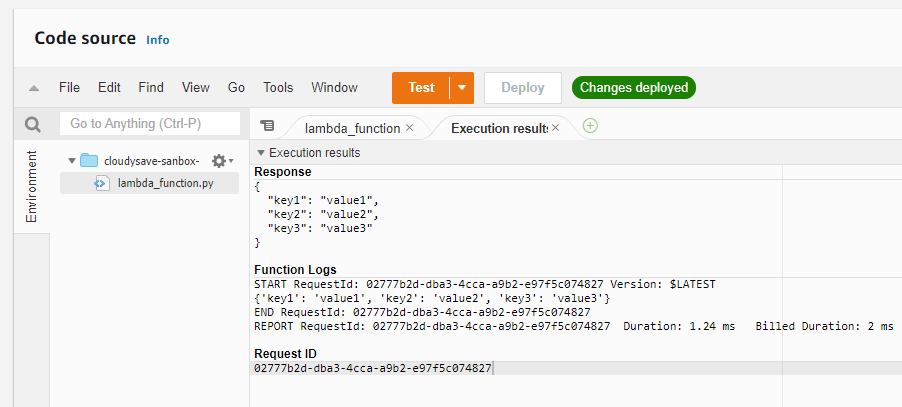
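As a cost-oriented example of an SCP, the sketch below builds a policy document that denies launching EC2 instances whose type is not on an approved list. The allow-list and the helper name `build_instance_type_scp` are hypothetical; the statement uses the standard IAM policy grammar and the `ec2:InstanceType` condition key, and the resulting JSON would be attached to an account or organizational unit through AWS Organizations.

```python
import json

# Hypothetical allow-list: adjust to the instance families your teams actually need.
ALLOWED_INSTANCE_TYPES = ["t3.micro", "t3.small", "t3.medium"]


def build_instance_type_scp(allowed_types):
    """Return an SCP document (as a dict) that denies ec2:RunInstances
    for any instance type not in `allowed_types`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnapprovedInstanceTypes",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                # Scope the check to the instance resource of the RunInstances
                # request, not to the volumes, ENIs, etc. it also touches.
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    # Deny when the requested type matches none of the allowed values.
                    "StringNotEquals": {"ec2:InstanceType": list(allowed_types)}
                },
            }
        ],
    }


if __name__ == "__main__":
    # JSON that could be pasted into the SCP editor or passed as the
    # Content argument of the Organizations create_policy call.
    print(json.dumps(build_instance_type_scp(ALLOWED_INSTANCE_TYPES), indent=2))
```

Because SCPs cap what any principal in the affected accounts can do, a guardrail like this limits accidental spend even when an individual IAM policy is too permissive.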

Most companies don't have a team working on spend monitoring and cost analysis. It's good practice to dedicate a small team to monitoring cost anomalies per service in a timely manner. Make sure this team also oversees resource ownership, which helps streamline overall spend. The team can set up Lambda invocations that automatically remediate a potential anomaly when one is detected.
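A minimal sketch of the per-service anomaly check such a team might automate, assuming daily cost figures per service are already available. It flags a day whose cost exceeds the trailing mean by more than `k` standard deviations. The function names, the 14-day window, `k=3`, and the event shape of the hypothetical Lambda `handler` are illustrative assumptions, not AWS defaults.

```python
from statistics import mean, stdev


def detect_cost_anomalies(daily_costs, window=14, k=3.0):
    """Return (day_index, cost) pairs where cost exceeds mean + k * stdev
    of the preceding `window` days (window must be at least 2).

    daily_costs: daily cost values (e.g. USD) for one service, oldest first.
    """
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        baseline = mean(history)
        spread = stdev(history)
        # With a perfectly flat history (spread == 0), any increase is flagged.
        if daily_costs[i] > baseline + k * spread:
            anomalies.append((i, daily_costs[i]))
    return anomalies


def handler(event, context):
    """Hypothetical Lambda entry point; `event` is assumed to carry
    {"service": str, "daily_costs": [float, ...]}."""
    found = detect_cost_anomalies(event["daily_costs"])
    # A real remediation step (stop, scale down, notify) would go here.
    return {"service": event["service"], "anomalies": found}
```

Keeping the detection logic in a plain function makes it easy to unit-test before wiring it to a scheduled Lambda and a remediation action.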

Tagging and Monitoring Process

AWS tagging is essential not only for identifying environments and resources but also for driving financial-management decisions based on existing resources and utilized services. Tagging should be mandatory across every environment, since most DevOps workflows and pipelines are built on top of resource tags. Improper tagging leads to unnecessary costs that are hard to catch and sometimes require manual intervention to remediate.

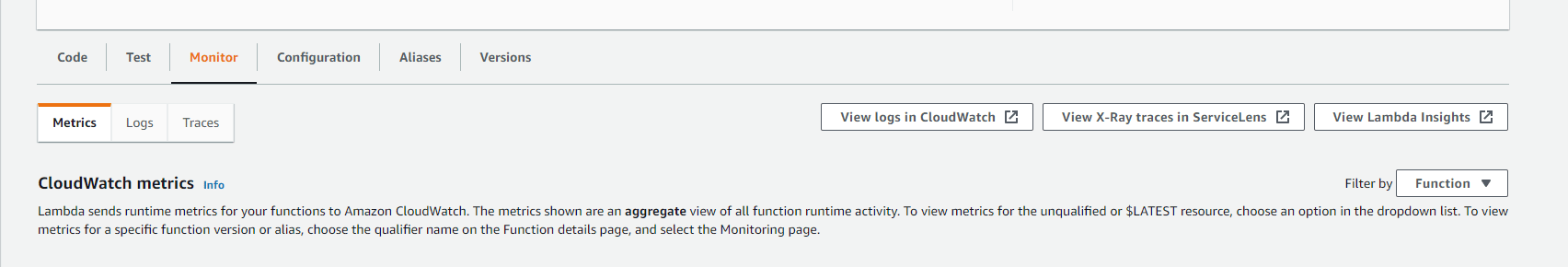
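The check below sketches how a pipeline could enforce mandatory tags. It assumes input shaped like the `ResourceTagMappingList` returned by the Resource Groups Tagging API (`get_resources` in boto3), where each entry has a `ResourceARN` and a list of `Tags` with `Key`/`Value` pairs. The required keys and the function name `find_noncompliant` are hypothetical examples.

```python
# Hypothetical mandatory tag keys; align these with your organization's tagging policy.
REQUIRED_TAGS = {"Environment", "Owner", "CostCenter"}


def find_noncompliant(resource_tag_mappings, required=REQUIRED_TAGS):
    """Map each resource ARN that is missing required tags (or has them
    with an empty value) to the sorted list of missing keys."""
    report = {}
    for item in resource_tag_mappings:
        present = {t["Key"] for t in item.get("Tags", []) if t.get("Value")}
        missing = sorted(set(required) - present)
        if missing:
            report[item["ResourceARN"]] = missing
    return report


if __name__ == "__main__":
    # Sample input in the ResourceTagMappingList shape; in practice this could come
    # from boto3.client("resourcegroupstaggingapi").get_resources(), paginated.
    sample = [
        {"ResourceARN": "arn:aws:ec2:us-east-1:111122223333:instance/i-0abc",
         "Tags": [{"Key": "Environment", "Value": "prod"},
                  {"Key": "Owner", "Value": "team-a"}]},
        {"ResourceARN": "arn:aws:s3:::example-bucket",
         "Tags": [{"Key": "Environment", "Value": "dev"},
                  {"Key": "Owner", "Value": "team-b"},
                  {"Key": "CostCenter", "Value": "1234"}]},
    ]
    print(find_noncompliant(sample))
```

A pipeline stage could fail the deployment, or open a ticket for the resource owner, whenever the report is non-empty.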

Infrastructure monitoring is also key to driving effective cost-reduction strategies. It can be costly at times, but with the latest AWS services the process is relatively cheap and efficient. Extracting this data and plotting visual patterns also greatly helps the team understand non-standard behavior. Make sure to include alerts on your budget thresholds, among others.
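For the extraction step, the sketch below flattens a Cost Explorer `get_cost_and_usage` response grouped by service into per-service daily series that can be plotted or fed to an alerting rule. It assumes the request used `Granularity="DAILY"`, `Metrics=["UnblendedCost"]`, and `GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}]`; the helper name is hypothetical and pagination (`NextPageToken`) is not handled.

```python
from collections import defaultdict


def cost_series_by_service(response, metric="UnblendedCost"):
    """Turn a grouped get_cost_and_usage response into
    {service: [(date, amount), ...]} ordered by date."""
    series = defaultdict(list)
    for period in response["ResultsByTime"]:
        day = period["TimePeriod"]["Start"]
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            # Cost Explorer returns amounts as strings; convert for arithmetic/plotting.
            amount = float(group["Metrics"][metric]["Amount"])
            series[service].append((day, amount))
    for points in series.values():
        points.sort()  # ISO dates sort correctly as strings
    return dict(series)


# Hypothetical usage with boto3 (requires AWS credentials):
# import boto3
# ce = boto3.client("ce")
# resp = ce.get_cost_and_usage(
#     TimePeriod={"Start": "2024-01-01", "End": "2024-02-01"},
#     Granularity="DAILY",
#     Metrics=["UnblendedCost"],
#     GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
# )
# print(cost_series_by_service(resp))
```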

Threshold-Based Alerting

Monitoring and alerting are two sides of the same coin. No matter how granular and automated the monitoring setup is, the alerts are the icing on the cake. Thresholds help quantify expectations, and it's strongly suggested to base them on previous data points. For cost management, AWS provides budget thresholds with service, tag, linked-account, and region-level granularity.
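To derive a threshold from previous data points, the sketch below sets a monthly budget limit to the highest recent monthly spend plus a headroom margin, then attaches two notifications: one on actual spend at 80% and one on forecasted spend at 100%. The dictionaries follow the `Budget` and `NotificationsWithSubscribers` parameters of the AWS Budgets `create_budget` call in boto3. The helper names, the 10% headroom, the notification levels, the budget name, and the SNS topic are hypothetical choices.

```python
def suggest_limit(monthly_history, headroom=0.10):
    """Budget limit = highest recent monthly spend plus a headroom fraction."""
    return round(max(monthly_history) * (1 + headroom), 2)


def build_budget_request(name, monthly_history, sns_topic_arn, cost_filters=None):
    """Return keyword arguments for budgets.create_budget (AccountId excluded)."""
    budget = {
        "BudgetName": name,
        # The Budgets API expects the amount as a string.
        "BudgetLimit": {"Amount": f"{suggest_limit(monthly_history):.2f}", "Unit": "USD"},
        "TimeUnit": "MONTHLY",
        "BudgetType": "COST",
    }
    if cost_filters:
        # e.g. {"Service": ["Amazon Elastic Compute Cloud - Compute"]} (assumed filter shape)
        budget["CostFilters"] = cost_filters

    def notification(kind, percent):
        return {
            "Notification": {
                "NotificationType": kind,  # "ACTUAL" or "FORECASTED"
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": float(percent),
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [{"SubscriptionType": "SNS", "Address": sns_topic_arn}],
        }

    return {
        "Budget": budget,
        "NotificationsWithSubscribers": [
            notification("ACTUAL", 80),
            notification("FORECASTED", 100),
        ],
    }
```

Usage would look roughly like `boto3.client("budgets").create_budget(AccountId="111122223333", **build_budget_request("ec2-monthly", [1000, 1200, 1100], topic_arn))`, where the account ID is a placeholder and the SNS topic's access policy must allow the Budgets service to publish to it.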

If thresholds are breached, automatically remediate the affected resources where possible. Also, alert the relevant ops team about the breach so the team stays on the same page as the automation. Manual approvals are sometimes required before remediating critical resources.

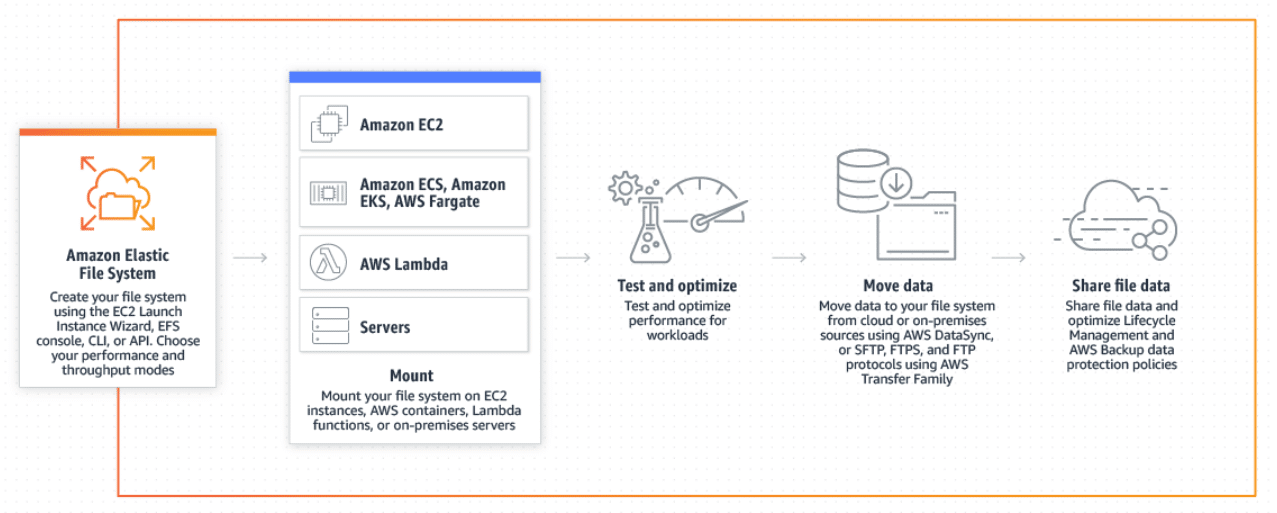
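One way to encode "remediate automatically where possible, require approval for critical resources" is a small routing function that a remediation Lambda could call for each affected resource. The tag names `Criticality` and `AutoRemediate`, the `Action` labels, and the 100% cut-off are hypothetical conventions, not AWS features.

```python
from enum import Enum


class Action(Enum):
    AUTO_REMEDIATE = "auto_remediate"      # e.g. stop or scale down without waiting
    REQUIRE_APPROVAL = "require_approval"  # page the ops team and wait for a human
    NOTIFY_ONLY = "notify_only"            # just report the breach


def route_breach(tags, percent_of_budget):
    """Decide how to respond to a budget breach for one resource.

    tags: dict of the resource's tags, e.g. {"Criticality": "high"}.
    percent_of_budget: current spend as a percentage of the budget limit.
    """
    if percent_of_budget < 100:
        # Early-warning thresholds (e.g. 80%) only inform the team.
        return Action.NOTIFY_ONLY
    if tags.get("Criticality", "").lower() in {"high", "critical"}:
        # Critical resources are never touched without a manual approval.
        return Action.REQUIRE_APPROVAL
    if tags.get("AutoRemediate", "true").lower() == "false":
        # Owners can opt a resource out of automatic remediation.
        return Action.NOTIFY_ONLY
    return Action.AUTO_REMEDIATE
```

Keeping the decision separate from the AWS calls makes it easy to test, and every branch can still send a notification so the ops team knows what the automation did.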

Programmatic Purchase Options

Everyone wants to reduce infrastructure costs. The best way to do that in the cloud is to audit and inspect the infrastructure and adopt Savings Plans where possible. This is a continuous-improvement strategy, and it's better to automate the purchase options. The aspects above also play a key role here, for example in identifying savings opportunities based on thresholds.
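A simple way to size a commitment from historical data is to commit to an hourly amount that on-demand usage nearly always exceeds, so the commitment is rarely wasted. The sketch picks a low percentile of hourly eligible spend and estimates savings under an assumed flat discount rate. Real Savings Plans rates vary by instance family, region, term, and payment option, and Cost Explorer can also produce purchase recommendations (`get_savings_plans_purchase_recommendation` in boto3). The function names and defaults below are hypothetical.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def size_commitment(hourly_on_demand, discount=0.25, pct=10):
    """Suggest an hourly commitment and estimate the savings over the period.

    hourly_on_demand: eligible on-demand spend per hour (USD), e.g. from a data lake.
    discount: ASSUMED flat discount of the plan versus on-demand (hypothetical).
    pct: percentile of hourly spend to cover; low values keep utilization high.
    """
    covered = percentile(hourly_on_demand, pct)  # on-demand-equivalent usage covered each hour
    commitment = covered * (1 - discount)        # what is actually paid per hour for the plan
    on_demand_total = sum(hourly_on_demand)
    # Each hour: pay the commitment, plus on-demand for any usage above the covered amount.
    with_plan_total = sum(commitment + max(0.0, h - covered) for h in hourly_on_demand)
    return {
        "hourly_commitment": round(commitment, 2),
        "estimated_savings": round(on_demand_total - with_plan_total, 2),
    }
```

Covering a low percentile trades some potential savings for a commitment that stays nearly fully utilized; raising `pct` covers more usage but risks paying for unused commitment during quiet hours.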

Choosing an effective, long-term Savings Plan is also key. The best reserved instances to choose for Compute, RDS, Elasticsearch, etc. should be determined by building a simple custom data-lake solution. Automating these decisions can bring great long-term benefits to both the organization and the operations team.
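Whether a reservation pays off for a given workload comes down to expected utilization. Because a reserved instance is billed for every hour of its term whether or not it runs, it beats on-demand only when the instance runs more than `ri_hourly / on_demand_hourly` of the time. The sketch applies that break-even rule; the function names are hypothetical, and the rates would in practice come from the data-lake solution mentioned above rather than being hard-coded.

```python
HOURS_PER_YEAR = 8760


def effective_ri_hourly(upfront, recurring_hourly, term_years=1):
    """Spread any upfront payment over every hour of the term."""
    return upfront / (term_years * HOURS_PER_YEAR) + recurring_hourly


def break_even_utilization(on_demand_hourly, ri_hourly):
    """Fraction of hours an instance must run for the reservation to be cheaper."""
    return ri_hourly / on_demand_hourly


def should_reserve(on_demand_hourly, ri_hourly, expected_utilization):
    """True if reserving is cheaper than paying on-demand at this utilization."""
    return expected_utilization > break_even_utilization(on_demand_hourly, ri_hourly)
```

For instance, with a hypothetical on-demand rate of $0.10/hour and an effective reserved rate of $0.06/hour, the instance must run more than 60% of the hours in the term for the reservation to win.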

These are only a few aspects to implement for a solid starting strategy. We will publish more articles in the near future to help improve your AWS cloud posture with respect to cost management. Meanwhile, have a look at a few other articles on AWS cost management:

- Cloud Cost Analysis
- AWS Cloud Migration Cost Estimation
- AWS Cost Models
- ServiceNow DevOps Pricing

- CloudySave is an all-round, one-stop shop for your organization and teams to reduce your AWS cloud costs by more than 55%.

- CloudySave's goal is to provide clear visibility into spending and usage patterns for your engineers and Ops teams.

- Have a quick look at CloudySave's Cost Calculator to estimate real-time AWS costs.
