This article walks through invoking an AWS Lambda function using Amazon S3 event notifications and highlights a few general use cases.

What are Events in S3?

Amazon S3 can send a notification when certain events happen in your bucket, such as an object being created or deleted. You enable these notifications by adding an event notification configuration to the bucket. The configuration specifies which event types to watch for and which destination receives them (a Lambda function, an SNS topic, or an SQS queue). That destination can then act on the event.

We will use a simple PUT-based event to trigger an AWS Lambda function. Let’s get started…

Invoking Lambda using S3 Events

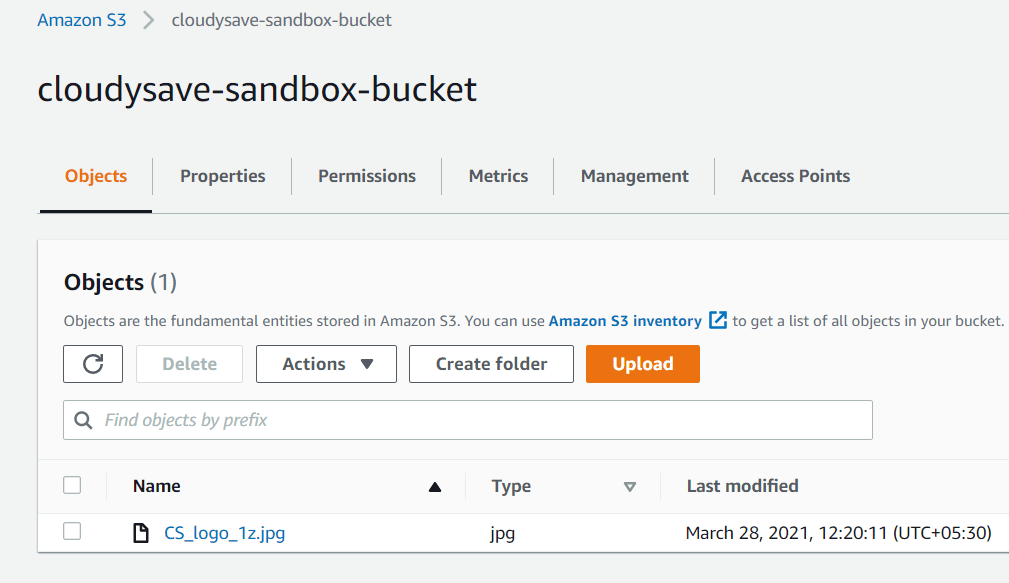

- Create a new S3 bucket or navigate to an existing one.

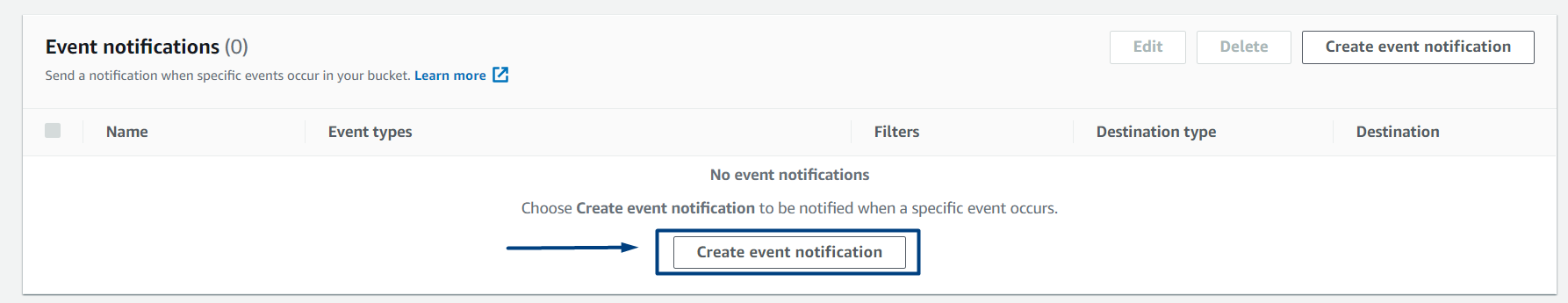

- Navigate to the Properties tab >> Event notifications. You can create new event-based notifications here.

- Click Create event notification.

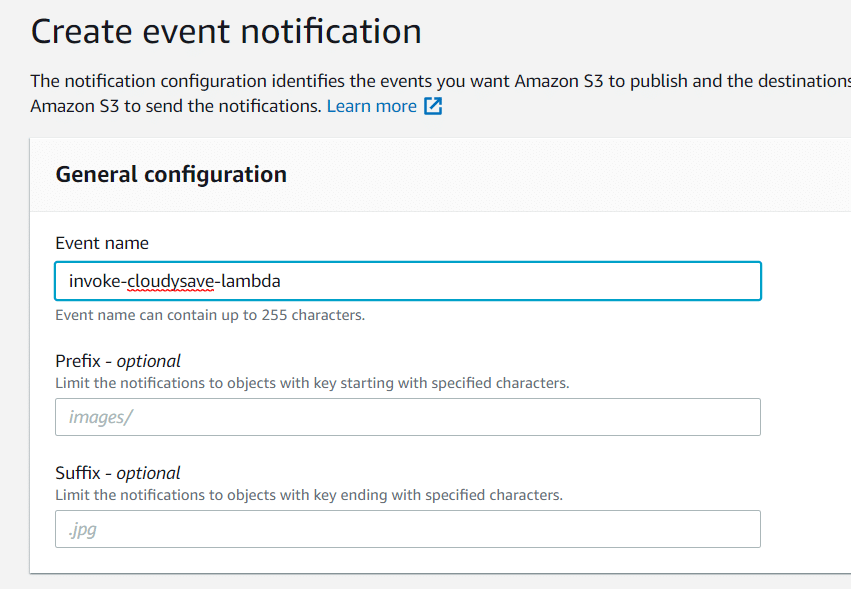

- Provide a name for the event notification. We are not using a prefix here. If you set one, only objects whose keys begin with that prefix (for example, images/) trigger the notification.

- You can also provide a suffix, such as a file extension like .jpg. We are not using one either, so events are generated for all objects in the bucket.

- Select the event types. We are checking All object create events (s3:ObjectCreated:*). You can select other event types if necessary.
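The prefix, suffix, and event-type choices work together as a filter that S3 evaluates before it sends a notification. Here is a small local sketch that makes these rules concrete. notification_matches is a hypothetical helper written for illustration, not an AWS API. It models only the plain prefix/suffix matching and the "*" wildcard for event types described in this post.

```python
from typing import Iterable, Optional


def notification_matches(
    key: str,
    event_name: str,
    event_types: Iterable[str],
    prefix: Optional[str] = None,
    suffix: Optional[str] = None,
) -> bool:
    """Illustrative model of an S3 notification filter (hypothetical, not an AWS API).

    key: object key, e.g. "CS_logo_1z.jpg"
    event_name: name as it appears in the event record, e.g. "ObjectCreated:Put"
    event_types: names as selected in the configuration, e.g. "s3:ObjectCreated:*"
    """
    # No prefix or suffix (the setup used in this post) means every key passes.
    if prefix and not key.startswith(prefix):
        return False
    if suffix and not key.endswith(suffix):
        return False

    for event_type in event_types:
        # The configuration uses an "s3:" namespace that the record omits.
        pattern = event_type[3:] if event_type.startswith("s3:") else event_type
        if pattern.endswith("*"):
            # "ObjectCreated:*" covers Put, Post, Copy, CompleteMultipartUpload, etc.
            if event_name.startswith(pattern[:-1]):
                return True
        elif event_name == pattern:
            return True
    return False


if __name__ == "__main__":
    # All object create events, no prefix or suffix: the uploaded logo matches.
    print(notification_matches("CS_logo_1z.jpg", "ObjectCreated:Put", ["s3:ObjectCreated:*"]))  # True
    # With a hypothetical "images/" prefix, the same upload would be filtered out.
    print(notification_matches("CS_logo_1z.jpg", "ObjectCreated:Put",
                               ["s3:ObjectCreated:*"], prefix="images/"))  # False
```

In a real bucket, S3 applies the filter itself. The sketch only shows why an empty prefix and suffix combined with All object create events lets every upload through.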

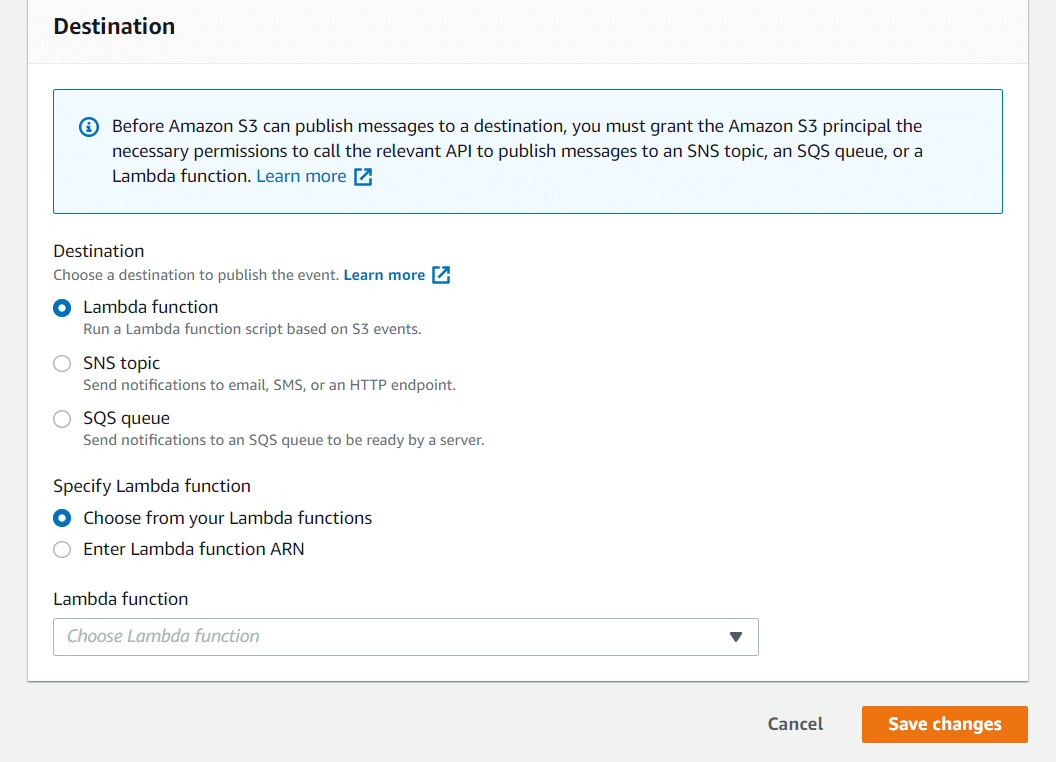

- Choose Lambda function as the destination. SNS topics and SQS queues are also available as destinations (we will discuss those in a different post).

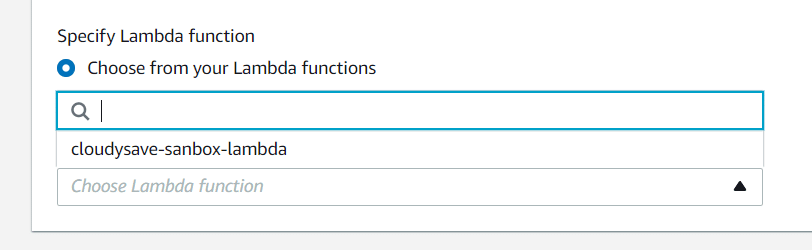

- Now the moment of truth: you can choose one of your Lambda functions or specify the Lambda function ARN.
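You can also script the same setup. The sketch below assumes boto3 is installed and AWS credentials are configured. It mirrors the console choices above: all object create events, with no prefix or suffix. The helper names, the Trigger-Lambda ID, and the AllowS3Invoke statement ID are illustrative assumptions. The API, unlike the console, does not add the invoke permission for you, so add_permission is called first. Without that permission, S3 is expected to reject the configuration because it cannot validate the destination.

```python
from typing import Any, Dict, Optional


def build_notification_configuration(
    function_arn: str,
    config_id: str = "Trigger-Lambda",
    prefix: Optional[str] = None,
    suffix: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a NotificationConfiguration equivalent to the console steps above."""
    lambda_config: Dict[str, Any] = {
        "Id": config_id,
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:*"],  # "All object create events"
    }
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    if rules:
        lambda_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [lambda_config]}


def connect_bucket_to_lambda(
    bucket_name: str,
    function_name: str,
    function_arn: str,
    account_id: Optional[str] = None,
    statement_id: str = "AllowS3Invoke",
    s3_client: Any = None,
    lambda_client: Any = None,
) -> None:
    """Grant S3 permission to invoke the function, then attach the notification."""
    if s3_client is None or lambda_client is None:
        import boto3  # imported lazily; only needed when real clients are created

        s3_client = s3_client if s3_client is not None else boto3.client("s3")
        lambda_client = lambda_client if lambda_client is not None else boto3.client("lambda")

    # Step 1: the resource-based permission the console would otherwise add for you.
    permission_args = {
        "FunctionName": function_name,
        "StatementId": statement_id,
        "Action": "lambda:InvokeFunction",
        "Principal": "s3.amazonaws.com",
        "SourceArn": f"arn:aws:s3:::{bucket_name}",
    }
    if account_id:
        # Bucket ARNs carry no account ID, so pinning the owner account narrows the grant.
        permission_args["SourceAccount"] = account_id
    lambda_client.add_permission(**permission_args)

    # Step 2: attach the notification. This REPLACES the bucket's whole
    # notification configuration, including any existing entries.
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=build_notification_configuration(function_arn),
    )
```

Because put_bucket_notification_configuration overwrites the existing configuration, a bucket that already has other notifications needs extra care. In that case, read the current settings with get_bucket_notification_configuration and merge them before writing.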

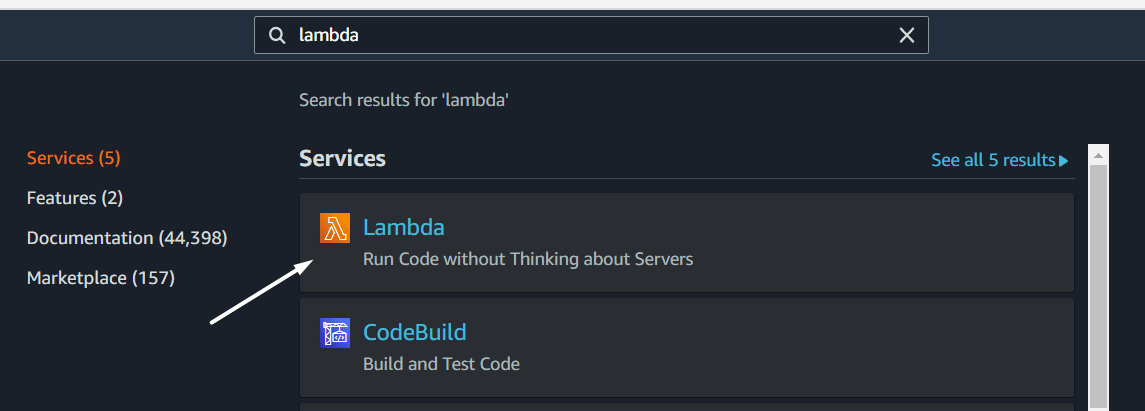

- If you haven’t created a Lambda function yet, no worries; open the Lambda console in a new tab.

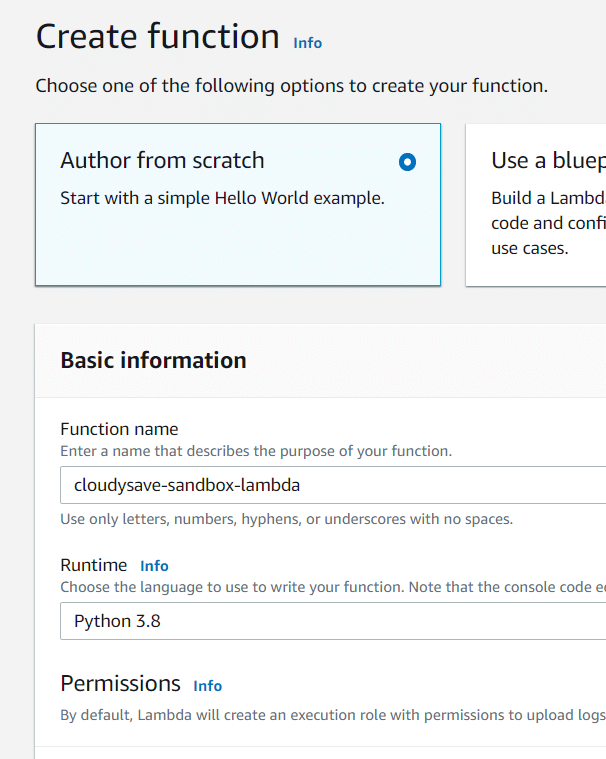

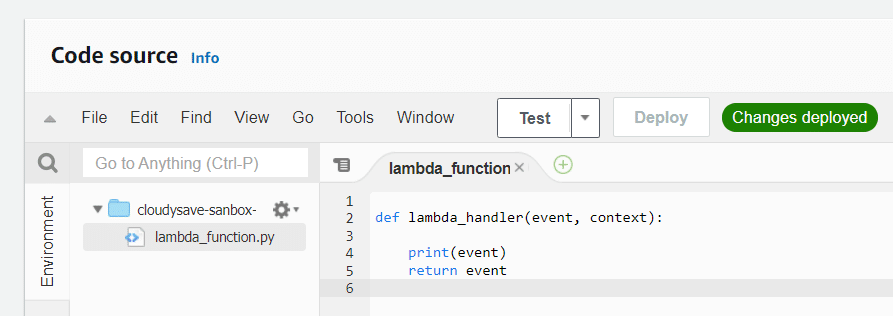

- Create a new function. We are using Python 3.8 as the runtime; keep all the default configurations and create the function.

- The Python code described below just prints and returns the event. Nothing fancy here!

- Update your Lambda handler code to include the following snippet.
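The handler is shown only as a screenshot. A minimal text version matching the description (print the event, then return it) might look like the following. It assumes the default lambda_function.py file and lambda_handler entry point that the Lambda console creates for new Python functions.

```python
def lambda_handler(event, context):
    # S3 passes the notification as `event`; print() output goes to CloudWatch Logs.
    print(event)
    # S3 invokes the function asynchronously, so S3 discards this return value.
    # It is visible only when you invoke the function manually, e.g. the console's Test button.
    return event


if __name__ == "__main__":
    # Quick local check with a minimal, hand-written event.
    sample = {"Records": [{"eventName": "ObjectCreated:Put"}]}
    assert lambda_handler(sample, None) == sample
```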

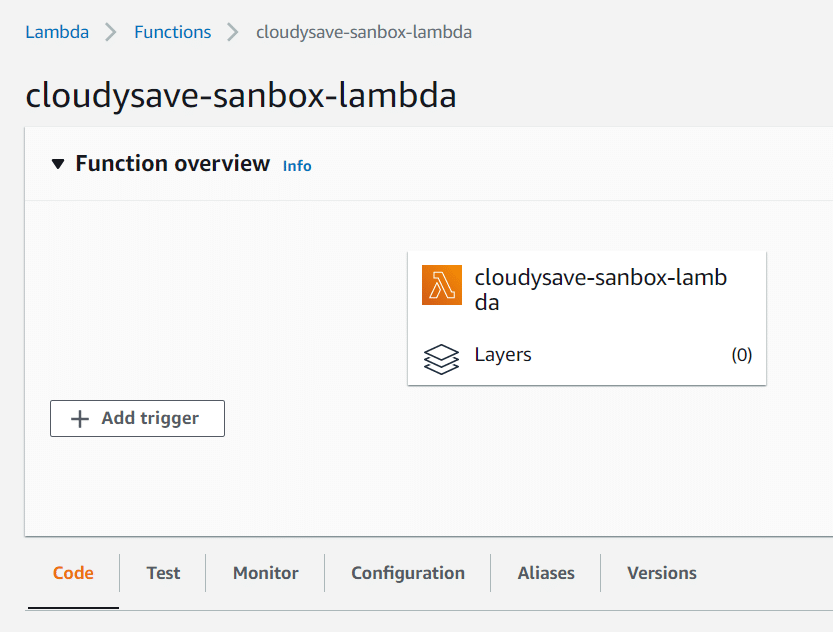

- Go back to the S3 console. You can now select the newly created Lambda function there, or paste its ARN.

- Voilà, everything is set up. Now let’s test the waters.

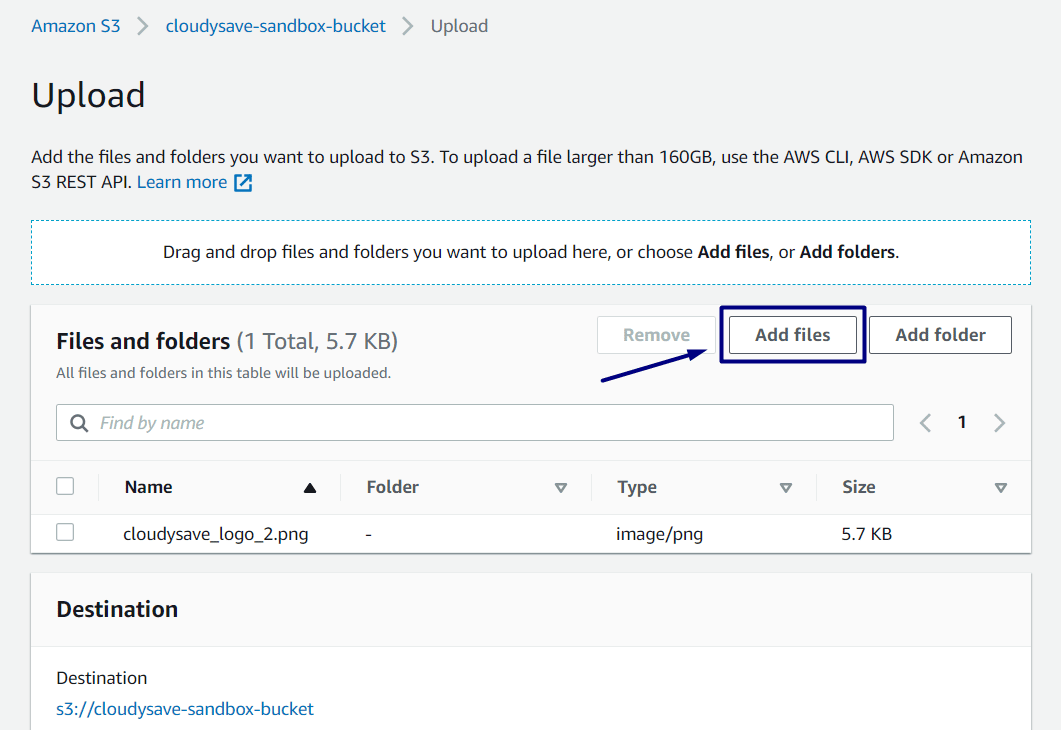

- Upload a file to your bucket.
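You can upload through the console as shown, or script the upload. This sketch assumes boto3 and configured credentials. The helper name upload_test_file and the file path are placeholders.

```python
import os
from typing import Any, Optional


def upload_test_file(path: str, bucket_name: str, key: Optional[str] = None, s3_client: Any = None) -> str:
    """Upload a local file to the bucket and return the object key that was used."""
    if s3_client is None:
        import boto3  # imported lazily so a stub client can be passed in for testing

        s3_client = boto3.client("s3")
    # Default the object key to the file name, e.g. "CS_logo_1z.jpg".
    key = key or os.path.basename(path)
    s3_client.upload_file(path, bucket_name, key)
    return key
```

For large files, boto3's upload_file switches to a multipart upload. In that case the event name is ObjectCreated:CompleteMultipartUpload rather than ObjectCreated:Put. We selected All object create events, so both trigger the function.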

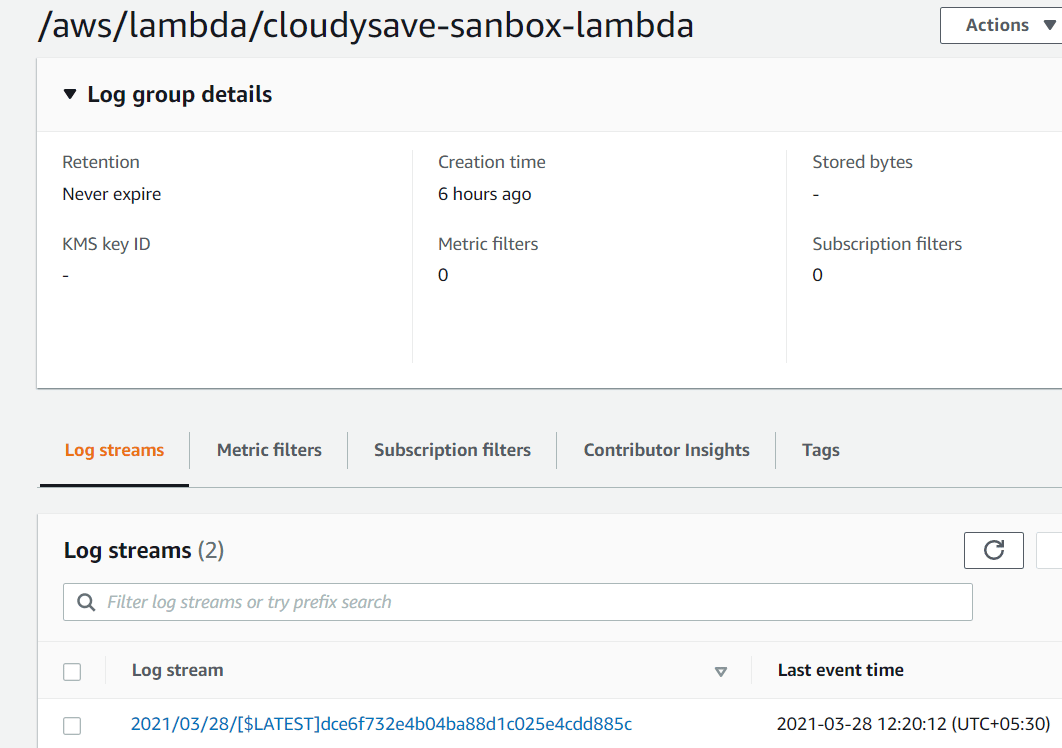

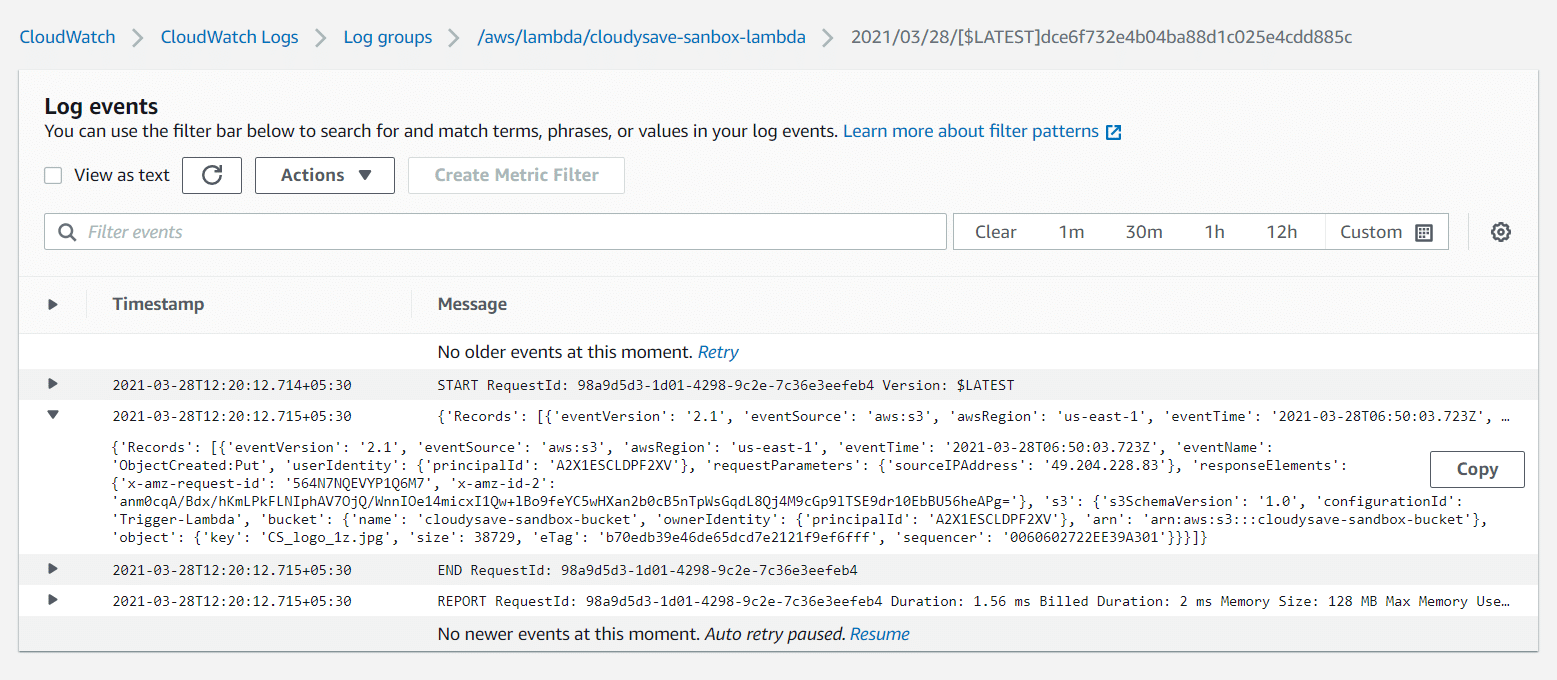

- Go back to the Lambda function console and navigate to the Monitor tab >> View logs in CloudWatch. This opens the log group for your function, which is named /aws/lambda/<your-function-name> by default and contains one or more log streams.

- Open the latest log stream to see the output printed by the function.

- Expanding the JSON data shows the details of the object that was uploaded to the S3 bucket. These include the bucket name and ARN and the object key, size, and ETag, along with the event name, time, and region. This is just a starting point that can be extended to many use cases.

{ 'Records': [{ 'eventVersion': '2.1', 'awsRegion': 'us-east-1', 'eventTime': '2021-03-28T06:50:03.723Z', 'eventName': 'ObjectCreated:Put', 'userIdentity': { 'principalId': 'A2X1ESCLDPF2XV' }, 'requestParameters': { 'sourceIPAddress': '49.204.228.83' }, 'responseElements': { 'x-amz-request-id': '564N7NQEVYP1Q6M7', 'x-amz-id-2': 'anm0cqA/Bdx/hKmLPkFLNIphAV7OjQ/WnnIOe14micxI1Qw+lBo9feYC5wHXan2b0cB5nTpWsGqdL8Qj4M9cGp9lTSE9dr10EbBU56heAPg=' }, 's3': { 's3SchemaVersion': '1.0', 'configurationId': 'Trigger-Lambda', 'bucket': { 'name': 'cloudysave-sandbox-bucket', 'ownerIdentity': { 'principalId': 'A2X1ESCLDPF2XV' }, 'arn': 'arn:aws:s3:::cloudysave-sandbox-bucket' }, 'object': { 'key': 'CS_logo_1z.jpg', 'size': 38729, 'eTag': 'b70edb39e46de65dcd7e2121f9ef6fff', 'sequencer': '0060602722EE39A301' } } }] }
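In practice, a handler usually extracts a few fields from each record rather than printing the whole event. The sketch below assumes the record layout shown above; summarize_s3_event is a hypothetical helper name. S3 URL-encodes object keys in event notifications (a space arrives as "+"), so the sketch decodes the key with unquote_plus before using it.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def summarize_s3_event(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Extract commonly used fields from each record of an S3 event notification."""
    summaries = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        obj = s3_info["object"]
        summaries.append(
            {
                "event_name": record["eventName"],
                "bucket": s3_info["bucket"]["name"],
                # Keys are URL-encoded in the event (a space arrives as "+").
                "key": unquote_plus(obj["key"]),
                # size/eTag may be missing for some event types, such as deletes.
                "size": obj.get("size"),
                "etag": obj.get("eTag"),
            }
        )
    return summaries


if __name__ == "__main__":
    # Trimmed copy of the event logged above.
    sample = {
        "Records": [
            {
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": "cloudysave-sandbox-bucket"},
                    "object": {"key": "CS_logo_1z.jpg", "size": 38729,
                               "eTag": "b70edb39e46de65dcd7e2121f9ef6fff"},
                },
            }
        ]
    }
    for item in summarize_s3_event(sample):
        print(item["bucket"], item["key"], item["size"])  # cloudysave-sandbox-bucket CS_logo_1z.jpg 38729
```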

Additional Details

- S3 invokes the Lambda function asynchronously, and the event message is passed to the handler as its event argument.

- If you set up the notification via the console, the console adds the necessary resource-based permission to the Lambda function so that the S3 bucket can invoke it.

- According to AWS, S3 event notifications are typically delivered within seconds, but delivery can sometimes take a minute or longer.

- AWS designs S3 event notifications to be delivered at least once. Duplicate deliveries are rare, but they can happen, so your function should tolerate receiving the same event twice.

- If two writes are made to a single non-versioned object at the same time, S3 may send only a single event notification.

- To ensure a notification is sent for every successful write, enable versioning on the bucket. With versioning, each successful write creates a new object version, and each version triggers its own event notification.
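Delivery is at least once, so a handler with side effects should be idempotent. Here is one possible approach, sketched under stated assumptions. It identifies each event by bucket, key, versionId (present when versioning is enabled), and sequencer, and it skips identities that were already processed. This assumes a redelivered notification carries the same values. The helper names are hypothetical. The in-memory set is only for illustration because it is not shared across concurrent execution environments or cold starts. A real deployment would need a durable store, for example a DynamoDB conditional write.

```python
from typing import Any, Callable, Dict, Set, Tuple

# Illustration only: this set lives in one execution environment's memory.
# It survives warm invocations but is not shared across concurrent
# environments or cold starts.
_processed: Set[Tuple[str, str, str, str]] = set()


def event_identity(record: Dict[str, Any]) -> Tuple[str, str, str, str]:
    """Identify one S3 event; assumes a redelivered notification repeats these values."""
    obj = record["s3"]["object"]
    return (
        record["s3"]["bucket"]["name"],
        obj["key"],
        obj.get("versionId", ""),  # present only when bucket versioning is enabled
        obj.get("sequencer", ""),
    )


def process_once(record: Dict[str, Any], handle: Callable[[Dict[str, Any]], None]) -> bool:
    """Run `handle` for a record unless it was already processed; return True if it ran."""
    identity = event_identity(record)
    if identity in _processed:
        return False  # duplicate delivery: skip the side effects
    handle(record)
    # Mark as done only after success, so a failed attempt can be retried.
    _processed.add(identity)
    return True
```

Inside the handler, you would call process_once(record, your_side_effect) for each entry in event["Records"].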

Final Thoughts

This article covered the basics of getting started with S3 event notifications and triggering Lambda. The same workflow applies to many use cases, such as automation and mobile or serverless apps. S3 is flexible, and Lambda supports many programming languages, so together they fit a wide range of automation scenarios on AWS. Make sure to experiment with S3 event notifications; we will discuss more S3 events in upcoming blog posts. If you are new to AWS Lambda, check out how AWS Lambda Invoke works.


- CloudySave is an all-round one-stop-shop for your organization & teams to reduce your AWS Cloud Costs by more than 55%.

- CloudySave’s goal is to give your engineering and ops teams clear visibility into spending and usage patterns.

- Have a quick look at CloudySave’s Cost Calculator to estimate real-time AWS costs.