Amazon Web Services (AWS) is a broadly adopted cloud platform with over 175 cloud-based services for businesses of all types. The AWS Partner Network (APN) is its global partner program for technology and consulting businesses, and it helps customers find reliable solutions for their needs.

It’s a one-stop platform where partner businesses can develop, market and sell AWS solutions, with support and services matched to their requirements.

At present, there are tens of thousands of APN Partners across the globe. More than 90% of Fortune 100 companies and the majority of Fortune 500 companies use APN Partner solutions and services to grow their businesses.

Data & Analytics APN Partners

The main aim of working with APN Partners is to move your business forward through an effective migration to the cloud. You can find suitable APN Partners with the AWS Partner Solutions Finder, a tool designed to help AWS customers search for and connect with trusted APN Partners who can handle their workloads on the AWS platform.

You can search for suitable APN Partners by three categories:

- Industry

- AWS Product

- Use Case

In this article, we will discuss some of the most trusted APN Partners for Data & Analytics use cases on the AWS platform:

1. Cloudreach

Cloudreach is a leading cloud service provider headquartered in London. The company is known for helping businesses monetize their data and grow with it. It offers a variety of applications, software and cloud-based services that cover all industries and types of business.

Cloudreach is a long-standing AWS partner that helps you find the right solution for your business requirements. It provides guidance on choosing the AWS solutions that can help elevate your business. Cloudreach was named 2019 UK & I APN Consulting Partner of the Year.

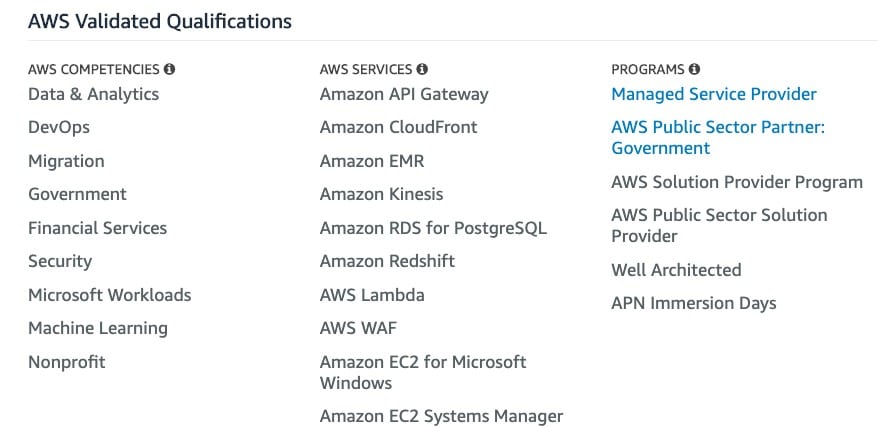

AWS Data and Analytics Use Case – Cloudreach

2. Deloitte

When it comes to the digital transformation of your business, Deloitte is one of the first names that comes to mind. It is an industry leader and a trusted provider of professional services and solutions to businesses of all kinds. The company is headquartered in London and operates in many countries across the globe.

The company has over 244,000 professionals and specialists who break down major business problems and turn them into solutions through deep analysis. Its market-leading team helps businesses and industries find their way in the new digital era.

If you are a casual learner, you can also find reliable resources through Deloitte’s network to get started on a new career. Its services are aimed not only at enterprises but also at individual users.

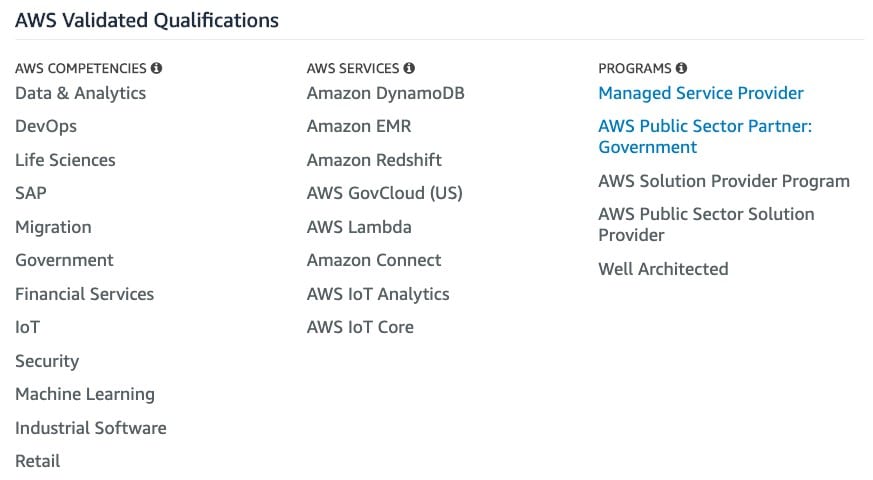

AWS Data and Analytics Use Case – Deloitte

3. Storm Reply

Storm Reply is known for providing solutions to businesses and industries through effective design and development strategies. The company holds AWS Premier Consulting Partner status and helps major customers run their applications and businesses on the AWS platform. It delivers its solutions through modern communication networks and digital media.

With extensive experience in cloud SaaS, IaaS and PaaS architectures, the company provides end-to-end services to its customers. Storm Reply supports major companies in Europe and across the world in implementing cloud-based systems and applications.

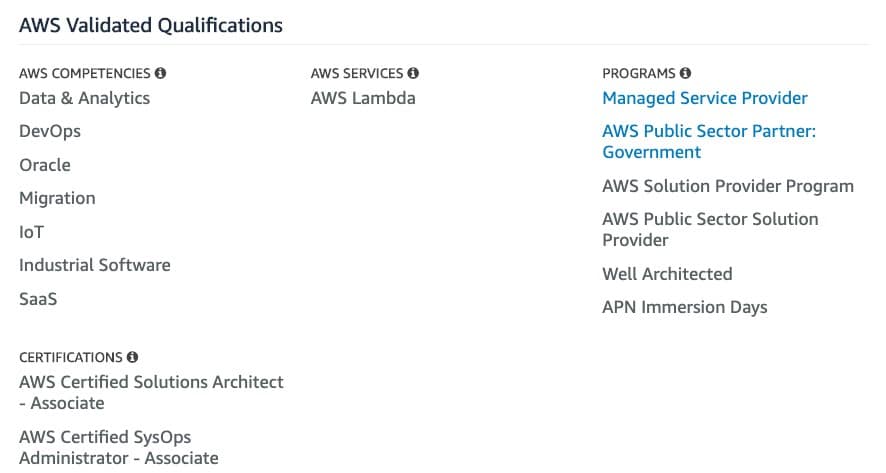

AWS Data and Analytics Use Case – Storm Reply

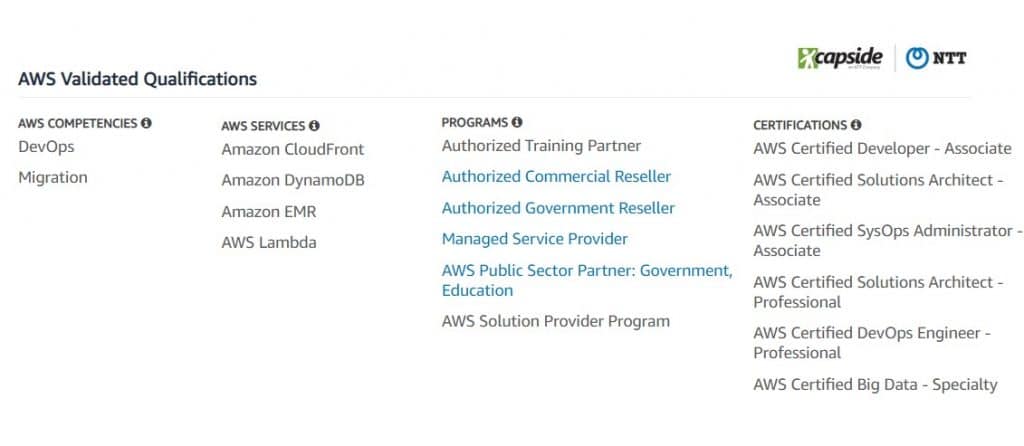

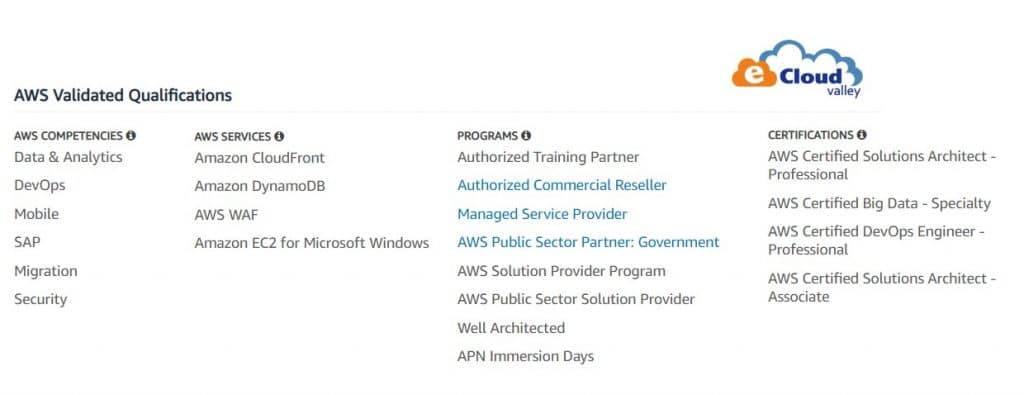

4. Ecloudvalley

Ecloudvalley is a leading AWS Premier Consulting Partner that helps businesses evolve in the cloud. The company is one of the most trusted cloud advisors on the AWS platform, with more than 400 certifications. With a team of highly qualified and experienced specialists, it delivers solutions for moving an on-premises business to the cloud.

Ecloudvalley is known for services such as cloud training, cloud migration, data solutions, next-generation MSP and an automated cloud management platform. It has served more than 1,000 customers in the region and plans to expand its services across the globe in the coming months.

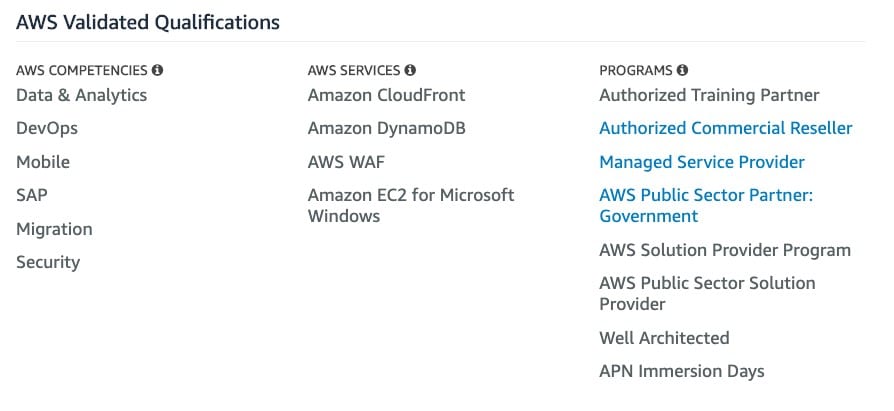

AWS Data and Analytics Use Case – Ecloudvalley

5. Wipro

Wipro is a trusted name in the technology world: a global company that provides technology, consulting and business process services to major customers. The company is headquartered in Bangalore, India, and offers cloud-based services to customers across the globe.

The company aims to deliver top-notch services by combining technologies such as robotics, cloud, analytics and hyper-automation, and it helps its clients adopt these technologies to run their businesses on digital platforms.

Wipro is a leading APN Partner that focuses on providing a complete AWS cloud service spanning cloud services, cybersecurity and data analysis. You can explore its range of solutions and pick the one that best suits your business needs.

AWS Data and Analytics Use Case – Wipro