Amazon S3 Cost Optimization

How to Optimize Costs for S3?

There are three major costs associated with S3:

| Cost type | Approximate price |
| --- | --- |
| Storage | ~$0.03 / GB / month, charged hourly |
| API requests (operations on files) | ~$0.005 / 10,000 read requests; write requests are about 10 times more expensive |
| Data transfer out of the AWS region | ~$0.02 / GB to a different AWS region, ~$0.06 / GB to the internet |

Prices differ based on volume and region, but optimization techniques remain unchanged.
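To see how the three cost components add up, here is a minimal sketch of a monthly estimate. The constants are the approximate prices quoted above, and the function name `estimate_monthly_cost` and its parameters are illustrative assumptions, not part of any AWS tool.

```python
# Approximate prices quoted in the table above (USD). Real prices vary by
# region and volume, so treat these constants as placeholders.
STORAGE_PER_GB_MONTH = 0.03
READ_PER_10K_REQUESTS = 0.005
WRITE_PER_10K_REQUESTS = 0.05  # roughly 10x the read price
INTER_REGION_PER_GB = 0.02
INTERNET_PER_GB = 0.06


def estimate_monthly_cost(stored_gb, reads, writes, inter_region_gb=0.0, internet_gb=0.0):
    """Return a rough monthly S3 cost breakdown in USD."""
    storage = stored_gb * STORAGE_PER_GB_MONTH
    requests = ((reads / 10_000) * READ_PER_10K_REQUESTS
                + (writes / 10_000) * WRITE_PER_10K_REQUESTS)
    transfer = inter_region_gb * INTER_REGION_PER_GB + internet_gb * INTERNET_PER_GB
    return {
        "storage": storage,
        "requests": requests,
        "transfer": transfer,
        "total": storage + requests + transfer,
    }


if __name__ == "__main__":
    bill = estimate_monthly_cost(stored_gb=1000, reads=1_000_000,
                                 writes=100_000, internet_gb=100)
    for item, usd in bill.items():
        print(f"{item:>8}: ${usd:.2f}")
```

With these placeholder prices, 1,000 GB stored, one million reads, 100,000 writes, and 100 GB sent to the internet comes to about $37 a month, most of it storage.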

What are the Basics of S3 Costs?

Choose the right AWS region for your S3 bucket.

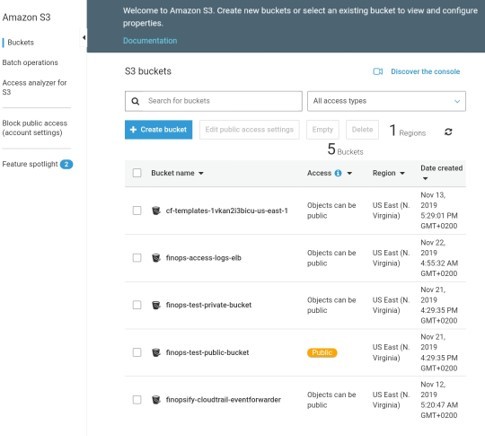

s3 cost optimization

- Free data transfer between EC2 and S3 of the same region.

- Downloading from another region costs $0.02 per GB.

- Select the right naming scheme for your object keys.

- Never share Amazon S3 credentials and always monitor credential usage.

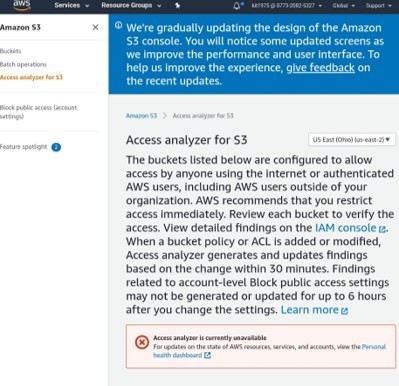

S3 Cost Optimization – Access Analyzer

- Rely on temporary credentials that can be revoked.

- Keep track of access keys and credential usage regularly to avoid problems.

- Don’t begin with Amazon Glacier directly.

- Stay simple.

- Only start with the Infrequent Access storage class when you rarely need to read the objects.

How Should You Analyze Your S3 Bill?

1. Review aggregated AWS S3 spend in the AWS Console.

2. For a more granular, per-bucket view, enable Cost Explorer or cost reporting to an S3 bucket.

Cost Explorer is the simplest place to begin.

You get more flexibility by downloading data from “S3 reports” to spreadsheets.

After reaching a certain scale, using dedicated cost monitoring SaaS like CloudHealth becomes your best bet.

The AWS bill is updated every day for storage charges, even though S3 storage is charged hourly.

You can also enable S3 access logs, which record an entry for each API access. These logs can grow quickly and become expensive to store.

All objects can be listed through the API, either by writing a script or by using a third-party GUI such as S3 Browser.
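As a rough illustration of the scripted approach, the sketch below totals object counts and bytes per top-level prefix and storage class. It assumes the `boto3` library and its `list_objects_v2` paginator; the helper names `summarize_pages` and `list_bucket_pages` are made up for this example. The summary function works on any iterable of response pages, so it can be tried without AWS access.

```python
from collections import defaultdict


def summarize_pages(pages):
    """Sum object count and bytes per (top-level prefix, storage class)."""
    totals = defaultdict(lambda: {"objects": 0, "bytes": 0})
    for page in pages:
        # A page with no objects has no "Contents" key.
        for obj in page.get("Contents", []):
            key = obj["Key"]
            prefix = key.split("/", 1)[0] if "/" in key else ""
            storage_class = obj.get("StorageClass", "STANDARD")
            totals[(prefix, storage_class)]["objects"] += 1
            totals[(prefix, storage_class)]["bytes"] += obj["Size"]
    return dict(totals)


def list_bucket_pages(bucket):
    """Yield list_objects_v2 pages for a bucket (needs boto3 and credentials)."""
    import boto3  # imported lazily so summarize_pages runs without boto3

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    return paginator.paginate(Bucket=bucket)
```

A call such as `summarize_pages(list_bucket_pages("my-bucket"))`, where `my-bucket` is a placeholder name, would show which prefixes hold the most data. Keep in mind that listing requests are themselves billed, so on very large buckets this is worth running sparingly.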

Cost Optimizations for S3:

1. Save money on storage fees

- Only store files that you really need.

- Delete files once they are no longer relevant.

- For example, delete objects a fixed period, such as 7 days, after they are created.

- Delete unused files that can be recreated.

- For example, the same image stored in many resolutions for thumbnails or galleries that are rarely accessed.

2. Use the “lifecycle” feature to delete old versions.

- A delete or overwrite in a versioned S3 bucket keeps the old version, and if you keep data forever you are going to have to keep paying for it forever.

- Clean up any incomplete multipart uploads.
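The lifecycle points above can be sketched as a single configuration. This is a minimal example, assuming the `boto3` call `put_bucket_lifecycle_configuration`; the `tmp/` prefix, the rule IDs, and the day counts are placeholder values chosen for illustration, not recommendations from the article.

```python
def build_lifecycle_config(tmp_prefix="tmp/", expire_days=7,
                           noncurrent_days=30, abort_multipart_days=7):
    """Build a lifecycle configuration dict in the shape boto3 expects."""
    return {
        "Rules": [
            {
                # Delete objects under one prefix a fixed time after creation.
                "ID": "expire-temporary-objects",
                "Filter": {"Prefix": tmp_prefix},
                "Status": "Enabled",
                "Expiration": {"Days": expire_days},
            },
            {
                # Bucket-wide: drop old versions and abandoned uploads.
                "ID": "expire-old-versions-and-stale-uploads",
                "Filter": {"Prefix": ""},
                "Status": "Enabled",
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
                "AbortIncompleteMultipartUpload": {
                    "DaysAfterInitiation": abort_multipart_days
                },
            },
        ]
    }


def apply_lifecycle(bucket, config):
    """Apply the configuration to a bucket (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder runs without boto3

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )
```

Note that applying a lifecycle configuration replaces whatever configuration the bucket already has, so existing rules should be merged in before calling `apply_lifecycle`.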

3. Compress Your Data Before It’s Sent to S3

- Rely on fast compression such as LZ4, which gives better performance and reduces your storage requirement and cost.

- Trade CPU time for better network IO and less spending on S3.
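A small sketch of the compress-before-upload idea follows. LZ4 needs a third-party package, so this example swaps in `gzip` from the Python standard library at its fastest level as a stand-in; the sample records and the helper name `compress_for_upload` are invented for illustration.

```python
import gzip
import json


def compress_for_upload(payload: bytes, level: int = 1) -> bytes:
    """Compress a payload before upload; level 1 favors speed over ratio."""
    return gzip.compress(payload, compresslevel=level)


# Hypothetical, repetitive log-style records, typical of data that compresses well.
records = [{"user_id": i, "event": "page_view", "path": "/products/123"}
           for i in range(1000)]
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
packed = compress_for_upload(raw)

if __name__ == "__main__":
    print(f"raw: {len(raw)} bytes, compressed: {len(packed)} bytes "
          f"({len(packed) / len(raw):.0%} of original)")
```

The compressed bytes are what would be uploaded and billed for storage and transfer. The actual ratio depends heavily on the data, so it is worth measuring on a real sample before committing to a codec.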

4. Data Format Matters in Big Data Apps

- Using better data structures can have an enormous impact on your application performance and storage size. The biggest changes:

- Use a binary format instead of a human-readable format. When storing many numbers, a binary format like Avro can store large numbers in less space than JSON.

- Choose between row-based and column-based storage. Use columnar storage for analytics batch processing because it provides better compression and storage optimization.
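To make the binary versus human-readable point concrete, the sketch below stores the same list of large integers as JSON text and as fixed-width binary. It uses the standard library `struct` module rather than Avro, so it only illustrates the size difference and is not Avro's actual encoding; the sample values are made up.

```python
import json
import struct

# Hypothetical data: 1,000 millisecond timestamps, each 13 digits long.
values = [1_700_000_000_000 + i for i in range(1000)]

# Human-readable: every digit and separator is a byte.
as_json = json.dumps(values).encode("utf-8")

# Binary: each value is a fixed 8-byte little-endian signed integer.
as_binary = struct.pack(f"<{len(values)}q", *values)

# The binary form round-trips to the same numbers.
decoded = list(struct.unpack(f"<{len(values)}q", as_binary))

if __name__ == "__main__":
    print(f"JSON:   {len(as_json)} bytes")
    print(f"binary: {len(as_binary)} bytes")
```

Here the binary form takes 8 bytes per value regardless of how many digits the number has, while the JSON form grows with every digit.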

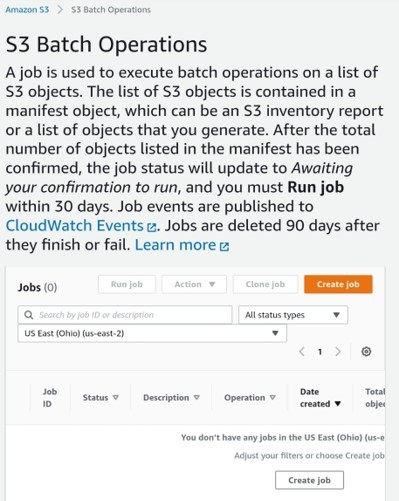

S3 Cost Optimization – Batch Operations

- A Bloom filter can reduce the need to access some files. Some indexes, however, may waste storage and deliver little performance gain.
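As a sketch of how a Bloom filter avoids file reads, the example below keeps a small bit array per file and checks it before downloading. The class, its sizing defaults, and the usage scenario are assumptions for illustration; a production system would size the filter from the expected number of keys and the acceptable false-positive rate.

```python
import hashlib


class BloomFilter:
    """A minimal Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size_bits=8192, num_hashes=4):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item):
        # Derive several bit positions by salting one hash function.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))


# Hypothetical usage: one filter per data file, built when the file is written.
file_filter = BloomFilter()
for user_id in ["user-1", "user-2", "user-3"]:
    file_filter.add(user_id)
```

If `file_filter.might_contain("user-2")` returns `False`, the file cannot contain that key and the GET request can be skipped. A `True` answer only means the file is worth fetching.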

5. Use Infrequent Access Storage Class

- This class provides the same API and performance as regular S3 storage.

- It’s about four times cheaper than standard storage: roughly $0.007 / GB / month versus $0.03 / GB / month. The catch is that you pay about $0.01 / GB for retrieval, while retrieval is free on the standard storage class.

If you download objects fewer than about two times a month, you save money by relying on IA.
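The break-even point follows directly from the prices quoted above. The sketch below works it out per GB; the function names are illustrative, and the calculation deliberately ignores request charges and any other fees not mentioned in this article.

```python
# Approximate prices quoted above (USD per GB).
STANDARD_STORAGE = 0.03   # per GB per month
IA_STORAGE = 0.007        # per GB per month
IA_RETRIEVAL = 0.01       # per GB retrieved


def monthly_cost_per_gb(downloads_per_month, storage_price, retrieval_price=0.0):
    """Storage plus retrieval cost for one GB read N times in a month."""
    return storage_price + downloads_per_month * retrieval_price


def break_even_downloads():
    """Full downloads per month at which IA costs the same as standard."""
    return (STANDARD_STORAGE - IA_STORAGE) / IA_RETRIEVAL


if __name__ == "__main__":
    print(f"break-even: {break_even_downloads():.1f} downloads per month")
    for n in range(4):
        ia = monthly_cost_per_gb(n, IA_STORAGE, IA_RETRIEVAL)
        std = monthly_cost_per_gb(n, STANDARD_STORAGE)
        print(f"{n} downloads: IA ${ia:.3f} vs standard ${std:.3f}")
```

With these numbers the break-even is 2.3 full downloads per month: at two downloads IA costs about $0.027 per GB against $0.03 for standard, and at three it costs about $0.037.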

See Also