AWS Lambda Response Size Limit and Deployment Packages

AWS Lambda Response Size Limit

When working with Lambda functions, the allowed size of deployment packages is a recurring concern.

First, we look at the Lambda deployment limits, starting with the 50 MB package size stated in the official AWS documentation. That figure is misleading, since you can deploy larger packages; a separate limit applies to the uncompressed files.

Inefficiently implemented lambda functions will cost you more than you anticipate.

Ask these questions first:

- How much do you currently spend on each Lambda function?

- How much will you spend on your next deployment?

- What increases the cost of your lambda functions?

You can get instant answers to all 3 questions above with our advanced and no-cost AWS Saving Report.

AWS Lambda Includes the Below Listed Response Size Limitations:

Limitations of Runtime Environment:

Disk space: limited to 512 MB.

Default deployment package size: 50 MB.

Memory range: 128 to 3,008 MB.

Max execution timeout of function: 15 minutes.

Limitations for Requests by Lambda:

Request and response body payload size: up to 6 MB.

Event (asynchronous) request body: up to 256 KB.

The 50 MB limit applies only when you upload the deployment package directly to Lambda. The limit is much higher once you let the Lambda function pull its deployment package from S3.

Amazon S3 lets you deploy function code with a significantly higher package size limit than a direct upload to Lambda allows.

Deployment Packages:

Let’s take a Machine Learning model as the deployment package and create some dummy data of a specific size, so that we can test the limits with different package sizes. The following limits will be tested:

– 50 MB: Max deployment package size

– 250 MB: Size of code/dependencies which may be zipped into your deployment package — uncompressed .zip/.jar size

Testing:

Let’s go through the main steps for directly uploading your package to a lambda function:

First, we need to zip the package. The zipped package is going to include all the files we need like:

- classify_image.py

- classify_image_graph_def.pb

- MachineLearning-Bundle.zip

Name the package as TestingPackage.zip.

zip -r TestingPackage.zip TestingPackage

Then check how much the compression helped.

$ ls -lhtr | grep zip

-rw-r--r-- 1 john staff 123M Nov 4 13:05 TestingPackage.zip

Even after zipping, the package is still about 132 MB.

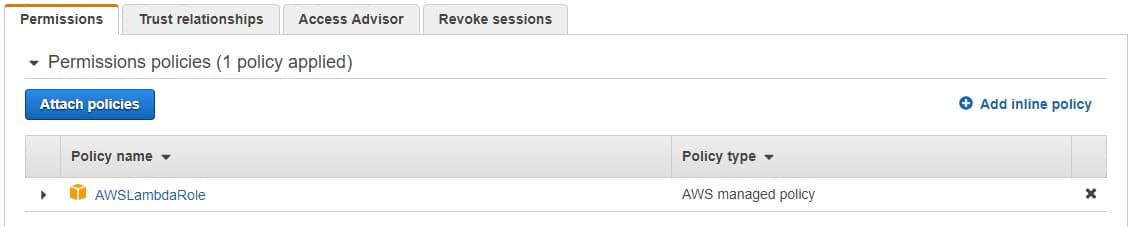
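The same check can be scripted. The sketch below assumes only Python's standard `zipfile` module and reports both the zipped size on disk and the total uncompressed size of an archive, the two numbers that matter for the limits tested here. The function name `package_sizes` is hypothetical, and the demo builds a small throwaway archive rather than the real TestingPackage.zip.

```python
import os
import tempfile
import zipfile


def package_sizes(zip_path):
    """Return (zipped_bytes, unzipped_bytes) for a deployment package."""
    zipped_bytes = os.path.getsize(zip_path)
    with zipfile.ZipFile(zip_path) as archive:
        # file_size is the uncompressed size of each archive member
        unzipped_bytes = sum(info.file_size for info in archive.infolist())
    return zipped_bytes, unzipped_bytes


# Demo on a throwaway archive (illustrative data, not the real package)
with tempfile.TemporaryDirectory() as tmp:
    demo_zip = os.path.join(tmp, "demo.zip")
    with zipfile.ZipFile(demo_zip, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("model.bin", b"\0" * 1_000_000)
    demo_zipped, demo_unzipped = package_sizes(demo_zip)

print(f"zipped: {demo_zipped} bytes, unzipped: {demo_unzipped} bytes")
```

Pointing `package_sizes` at your own archive shows at a glance how far the package is from each limit.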

Creating a Lambda function requires an IAM role. We skip the detailed steps here: log in to the IAM Management Console, create a role named Test-role, and attach the AWSLambdaRole policy.


Next, create a Lambda function using the CLI and upload the deployment package directly to the function.

aws lambda create-function --function-name mlearn-test --runtime nodejs6.10 --role arn:aws:iam::XXXXXXXXXXXX:role/Test-role --handler tensorml --region ap-south-1 --zip-file fileb://./TestingPackage.zip

Replace XXXXXXXXXXXX with your AWS account ID. Because the package is larger than the 50 MB limit, the command will throw an error.

Because the deployment package is so large, Lambda needs to load it from Amazon S3 instead. To do so, create an S3 bucket from the CLI:

aws s3 mb s3://mlearn-test --region ap-south-1

The command above creates an S3 bucket. Next, upload the package to the newly created bucket:

aws s3 cp ./ s3://mlearn-test/ --recursive --exclude "*" --include "TestingPackage.zip"

Once the package is uploaded to the bucket, update the Lambda function with the package's object key:

aws lambda update-function-code --function-name mlearn-test --region ap-south-1 --s3-bucket mlearn-test --s3-key TestingPackage.zip

This time no error is shown when updating the Lambda function, so the package uploads successfully. This means the package can be larger than 50 MB when it is uploaded through S3 rather than directly. Because the package is only about 132 MB after compression, we still have not found the maximum package size.

To reach the maximum, let's create a file of dummy data larger than 300 MB, upload it through S3, and update the Lambda function. On Windows, fsutil can create such a file:

fsutil file createnew trial300.txt 350000000

This command creates a sample file of 350,000,000 bytes. Zip the file as trial300.zip, then upload it through S3 once more:

aws s3 cp ./ s3://mlearn-test/ --recursive --exclude "*" --include "trial300.zip"

aws lambda update-function-code --function-name mlearn-test --region ap-south-1 --s3-bucket mlearn-test --s3-key trial300.zip
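`fsutil` is a Windows tool, so on other platforms the test file has to be produced another way. The following is a minimal cross-platform sketch in Python, assuming the file only needs to have a given size and its contents do not matter; `create_blank_file` is a hypothetical helper name. The demo uses a much smaller file than the article's 350000000 bytes so that it runs quickly.

```python
import os
import tempfile
import zipfile


def create_blank_file(path, size_bytes):
    """Create a file of exactly size_bytes bytes (like fsutil createnew)."""
    with open(path, "wb") as handle:
        # Extending an empty file zero-fills it on most platforms
        handle.truncate(size_bytes)


with tempfile.TemporaryDirectory() as tmp:
    blank_path = os.path.join(tmp, "trial.txt")
    zip_path = os.path.join(tmp, "trial.zip")
    # 5,000,000 bytes keeps the demo quick; the article uses 350000000
    create_blank_file(blank_path, 5_000_000)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.write(blank_path, arcname="trial.txt")
    blank_size = os.path.getsize(blank_path)
    zipped_size = os.path.getsize(zip_path)

print(f"raw: {blank_size} bytes, zipped: {zipped_size} bytes")
```

A file of zero bytes compresses to almost nothing, so an archive built this way stays small on disk even though its unzipped size is large.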

When you update the Lambda function, you will get the following error:

An error occurred (InvalidParameterValueException) when calling the UpdateFunctionCode operation: Unzipped size must be smaller than 262144000 bytes.

This error shows that the unzipped package must be smaller than 262144000 bytes. That is exactly 250 × 1024 × 1024 bytes (about 262 MB in decimal units), so it matches the documented 250 MB limit on the uncompressed size of the code and dependencies in a deployment package.
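A quick arithmetic check shows where the number in the error message comes from. This sketch assumes nothing beyond the figure quoted in the error.

```python
UNZIPPED_LIMIT_BYTES = 262144000  # value quoted in the error message

limit_in_mib = UNZIPPED_LIMIT_BYTES / (1024 * 1024)  # binary megabytes
limit_in_mb = UNZIPPED_LIMIT_BYTES / (1000 * 1000)   # decimal megabytes

print(limit_in_mib)  # 250.0
print(limit_in_mb)   # 262.144
```

The limit is 250 MB when a megabyte is counted as 1024 × 1024 bytes, which is why the byte count looks larger than 250 million.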

After all this, we have found that the maximum size of an uncompressed deployment package is 250 MB when uploading through S3. When uploading directly to the Lambda function, however, the package cannot exceed 50 MB.
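These findings can be summarized as a small decision rule. The sketch below is illustrative only: `choose_upload_path` is a hypothetical helper, the unzipped limit is the byte count from the error message, and treating 50 MB as 50 × 1024 × 1024 bytes is an assumption. The sizes in the example calls are made up.

```python
DIRECT_UPLOAD_LIMIT = 50 * 1024 * 1024  # assumption: 50 MB in binary units
UNZIPPED_LIMIT = 262144000              # from the UpdateFunctionCode error


def choose_upload_path(zipped_bytes, unzipped_bytes):
    """Return 'direct', 's3', or 'too_large' for a deployment package."""
    # The error says the unzipped size must be *smaller than* the limit
    if unzipped_bytes >= UNZIPPED_LIMIT:
        return "too_large"
    if zipped_bytes <= DIRECT_UPLOAD_LIMIT:
        return "direct"
    return "s3"


# Illustrative sizes only
print(choose_upload_path(10 * 1024**2, 40 * 1024**2))    # direct
print(choose_upload_path(132 * 1024**2, 200 * 1024**2))  # s3
print(choose_upload_path(1 * 1024**2, 350_000_000))      # too_large
```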

The most important thing to keep in mind is that the code plus its dependencies must stay within the 250 MB limit when uncompressed. A larger package can also noticeably increase the Lambda function's cold start time, so the function takes longer to execute.

AWS Lambda limits the amount of compute and storage resources that you can use to run and store functions.

The following limits apply per region and can be increased on request. To request an increase, use the Support Center console.

| Resource | Default Limit |
|---|---|
| Concurrent executions | 1000 |
| Function and layer storage | 75 GB |
| Elastic network interfaces per VPC | 250 |

The following limits apply to function configuration, deployments, and execution. They cannot be changed.

| Resource | Limit |
|---|---|
| Function memory allocation | 128 MB to 3,008 MB, in 64 MB increments |
| Function timeout | 900 seconds (15 minutes) |
| Function environment variables | 4 KB |
| Function resource-based policy | 20 KB |
| Function layers | 5 layers |
| Function burst concurrency | 500 - 3000 (varies per region) |
| Invocation frequency (requests per second) | 10 x concurrent executions limit (synchronous, all sources); 10 x concurrent executions limit (asynchronous, non-AWS sources); unlimited (asynchronous, AWS service sources) |
| Invocation payload (request and response) | 6 MB (synchronous); 256 KB (asynchronous) |
| Deployment package size | 50 MB (zipped, for direct upload); 250 MB (unzipped, including layers); 3 MB (console editor) |
| Test events (console editor) | 10 |
| /tmp directory storage | 512 MB |
| File descriptors | 1024 |
| Execution processes/threads | 1024 |
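The invocation payload limits in the table can be checked before a function is called. The sketch below is a rough illustration: `payload_fits` is a hypothetical helper, it assumes the payload is sent as UTF-8 encoded JSON, and it assumes the limits are counted in binary units.

```python
import json

SYNC_PAYLOAD_LIMIT = 6 * 1024 * 1024  # 6 MB, assuming binary units
ASYNC_PAYLOAD_LIMIT = 256 * 1024      # 256 KB, assuming binary units


def payload_fits(payload, asynchronous=False):
    """Check whether a JSON-serializable payload is within the limit."""
    encoded = json.dumps(payload).encode("utf-8")
    limit = ASYNC_PAYLOAD_LIMIT if asynchronous else SYNC_PAYLOAD_LIMIT
    return len(encoded) <= limit


small_event = {"message": "hello"}
large_event = {"blob": "x" * (300 * 1024)}  # roughly 300 KB of text

print(payload_fits(small_event))                     # True
print(payload_fits(large_event))                     # True
print(payload_fits(large_event, asynchronous=True))  # False
```

A payload that is acceptable for a synchronous call can still be too large for an asynchronous one, as the last line shows.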

The AWS Lambda free usage tier also has limits: it includes 1 million free Lambda requests per month and 400,000 GB-seconds of compute time per month. Beyond that, requests cost $0.20 per 1 million requests. AWS Lambda cost depends on the duration of the function and the amount of memory allocated to it. The Lambda cost calculator can give you a clear view of your updated Lambda price.
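As a rough illustration of how these figures combine, the sketch below estimates a monthly bill. It is not an official pricing formula: `monthly_cost` is a hypothetical helper, and the per-GB-second compute price is not given in this article, so it is passed in as a parameter and the value used in the example is a placeholder.

```python
FREE_REQUESTS = 1_000_000          # free requests per month
FREE_GB_SECONDS = 400_000          # free compute per month
PRICE_PER_MILLION_REQUESTS = 0.20  # USD, as quoted above


def monthly_cost(requests, avg_duration_s, memory_mb, price_per_gb_second):
    """Estimate a monthly bill in USD after subtracting the free tier."""
    gb_seconds = requests * avg_duration_s * (memory_mb / 1024)
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = billable_gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Placeholder rate for illustration only; look up the real per-GB-second price
example_rate = 0.00001
cost = monthly_cost(3_000_000, 1.0, 512, example_rate)
print(round(cost, 2))
```

The example models 3 million requests per month, each running for one second with 512 MB of memory; a workload that stays inside the free tier comes out at zero.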