AWS Lambda Scaling

The first time you invoke your function, Lambda creates an instance of the function and runs its handler method to process the event. When the function returns a response, the instance stays active and waits to process additional events. If you invoke the function again while the first event is still being processed, Lambda initializes another instance, and the function processes the two events concurrently. As more events come in, Lambda routes them to available instances and creates new instances as needed. When the number of requests decreases, Lambda stops unused instances to free up scaling capacity for other functions.
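The routing behavior described above can be sketched as a small model. This is only an illustration, not how Lambda is implemented: the function name `peak_concurrency` and the `(start, duration)` event format are assumptions made for this example.

```python
def peak_concurrency(invocations):
    """Return the largest number of invocations in flight at once.

    `invocations` is a list of (start, duration) pairs in seconds.
    The result approximates how many instances would be needed if
    every idle instance is reused before a new one is created.
    """
    events = []
    for start, duration in invocations:
        events.append((start, 1))              # invocation begins
        events.append((start + duration, -1))  # invocation ends
    # Sort by time; at equal times, ends (-1) sort before starts (+1),
    # so an instance that has just finished can be reused.
    events.sort(key=lambda e: (e[0], e[1]))

    in_flight = 0
    peak = 0
    for _, delta in events:
        in_flight += delta
        peak = max(peak, in_flight)
    return peak


# Two overlapping events need two instances. The third event arrives
# after the first has finished, so it can reuse the idle instance.
print(peak_concurrency([(0.0, 1.0), (0.5, 1.0), (1.2, 0.5)]))  # prints 2
```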

Function concurrency: the number of instances serving requests at a given time.

Initial burst of traffic: your functions' cumulative concurrency in a Region can reach an initial level of between 500 and 3000, depending on the Region.

Limits for Burst Concurrency

- 3000: US East (N. Virginia), US West (Oregon), Europe (Ireland)

- 1000: Europe (Frankfurt), Asia Pacific (Tokyo)

- 500: Other selected Regions

After the initial burst, your functions' concurrency can scale by an additional 500 instances each minute. This continues until there are enough instances to serve all requests, or until a concurrency limit is reached. When requests arrive faster than your function can scale, or when your function is at maximum concurrency, additional requests fail with a throttling error (429 status code).
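As a rough worked example of the numbers above, the following sketch computes how much concurrency is available a given number of minutes after the initial burst, and how many concurrent requests would be throttled. The function names are hypothetical, and the `account_limit` of 10,000 is an assumed raised limit chosen so that the linear phase is visible. It is a simplified model, not Lambda's actual algorithm.

```python
def concurrency_ceiling(minutes_elapsed, burst_limit=3000,
                        account_limit=10000, step_per_minute=500):
    """Concurrency available `minutes_elapsed` minutes after the burst.

    burst_limit: initial burst level for the Region (500 to 3000).
    account_limit: assumed regional concurrency limit for the account.
    step_per_minute: extra instances added each minute after the burst.
    """
    scaled = burst_limit + step_per_minute * minutes_elapsed
    return min(account_limit, scaled)


def throttled_requests(concurrent_requests, minutes_elapsed, **limits):
    """Concurrent requests that would be rejected with a 429 error."""
    ceiling = concurrency_ceiling(minutes_elapsed, **limits)
    return max(0, concurrent_requests - ceiling)


print(concurrency_ceiling(0))        # 3000: the initial burst level
print(concurrency_ceiling(4))        # 5000: 3000 + 4 * 500
print(concurrency_ceiling(20))       # 10000: capped by the account limit
print(throttled_requests(6000, 4))   # 1000 requests over the ceiling
```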

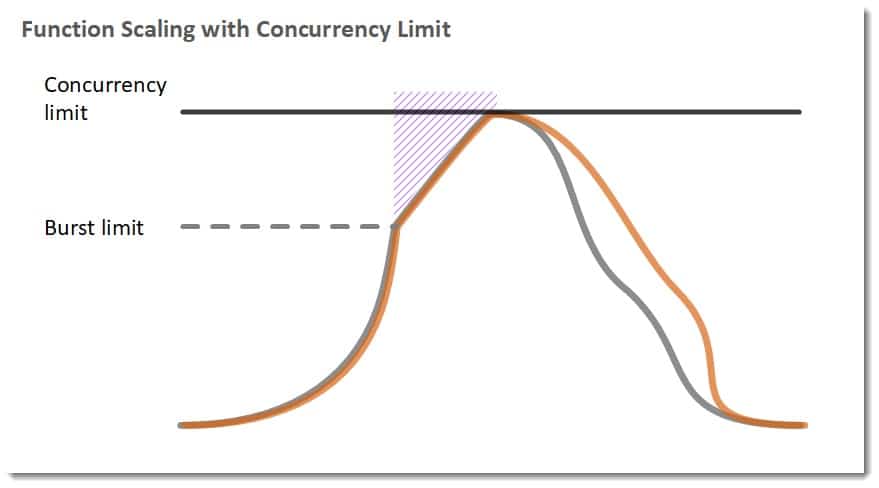

The accompanying graph shows a function processing a spike in traffic. As invocations increase exponentially, the function scales up, initializing a new instance for any request that can't be routed to an available instance. When the burst concurrency limit is reached, the function starts to scale linearly. If this isn't enough concurrency to serve all requests, additional requests are throttled and should be retried.

AWS Lambda Scaling – function scaling with concurrency limit

The function continues to scale until the account's concurrency limit for the function's Region is reached. As the function catches up to demand, requests subside, and unused instances of the function are stopped after being idle for some time. Unused instances are frozen while they wait for requests and don't incur any charges.

Regional concurrency limit: starts at 1,000. You can increase it by submitting a request in the Support Center console. To allocate capacity on a per-function basis, you can configure functions with reserved concurrency. Reserved concurrency creates a pool that can only be used by its function, and it also prevents that function from using unreserved concurrency.
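The effect of reserved concurrency on the regional pool can be sketched with a little arithmetic. The function names and the example functions (`checkout`, `reports`, `thumbnails`) are hypothetical. The model only captures the two rules stated above: a reserved pool belongs to its function alone, and that function cannot draw on unreserved concurrency.

```python
def unreserved_pool(regional_limit, reserved):
    """Concurrency left over for functions without a reservation."""
    return regional_limit - sum(reserved.values())


def max_concurrency(function_name, regional_limit, reserved):
    """Upper bound on concurrency for one function in this model."""
    if function_name in reserved:
        # A function with reserved concurrency is capped at its own pool.
        return reserved[function_name]
    # Other functions share whatever has not been reserved.
    return unreserved_pool(regional_limit, reserved)


reserved = {"checkout": 200, "reports": 50}        # hypothetical functions
print(unreserved_pool(1000, reserved))             # 750
print(max_concurrency("checkout", 1000, reserved))     # 200
print(max_concurrency("thumbnails", 1000, reserved))   # 750, shared with others
```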

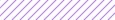

When your function scales up, the first request served by each instance is affected by the time it takes to load and initialize your code. If your initialization code takes a long time, the impact on average and percentile latency can be significant. To let your function scale without fluctuations in latency, use provisioned concurrency. The accompanying graph shows a function with provisioned concurrency processing a spike in traffic.
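To see why initialization time matters for average and percentile latency, consider this small calculation. All numbers are made up for illustration (50 ms warm latency, 2,000 ms of initialization, 20 cold starts out of 1,000 requests), and `latency_summary` is a hypothetical helper, not part of any AWS SDK.

```python
def latency_summary(warm_ms, init_ms, total_requests, cold_requests):
    """Return (mean, median, p99) latency in milliseconds.

    Cold requests pay `init_ms` on top of the warm latency because they
    are the first request served by a newly initialized instance.
    """
    latencies = ([warm_ms] * (total_requests - cold_requests)
                 + [warm_ms + init_ms] * cold_requests)
    latencies.sort()
    n = len(latencies)
    mean = sum(latencies) / n
    median = latencies[(n - 1) // 2]
    # Nearest-rank 99th percentile, using integer arithmetic for the rank.
    p99_rank = (99 * n + 99) // 100
    p99 = latencies[p99_rank - 1]
    return mean, median, p99


mean, median, p99 = latency_summary(warm_ms=50, init_ms=2000,
                                    total_requests=1000, cold_requests=20)
print(mean, median, p99)  # 90.0 50 2050
```

In this example only 2% of requests are cold, yet the mean nearly doubles and the 99th percentile is dominated by initialization time, while the median is unaffected.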

AWS Lambda Scaling – function scaling with provisioned concurrency

When provisioned concurrency is allocated, the function scales with the same burst behavior as standard concurrency. Once allocated, provisioned concurrency serves incoming requests with very low latency. When all provisioned concurrency is in use, the function scales up normally to handle any additional requests.
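A minimal sketch of that overflow behavior, using a hypothetical `split_requests` helper: requests are served by provisioned concurrency first, and anything beyond it falls through to standard concurrency.

```python
def split_requests(concurrent_requests, provisioned_concurrency):
    """Return (served_by_provisioned, served_by_standard)."""
    served_by_provisioned = min(concurrent_requests, provisioned_concurrency)
    # Whatever does not fit is handled by standard (on-demand) scaling.
    served_by_standard = concurrent_requests - served_by_provisioned
    return served_by_provisioned, served_by_standard


print(split_requests(80, 100))   # (80, 0): provisioned capacity suffices
print(split_requests(250, 100))  # (100, 150): 150 overflow to standard
```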

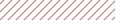

Application Auto Scaling: provides autoscaling for provisioned concurrency. With Application Auto Scaling, you can create a target tracking scaling policy that automatically adjusts provisioned concurrency levels based on the utilization metric that Lambda emits.

Application Auto Scaling API: used to register an alias as a scalable target and to create a scaling policy.

The accompanying graph shows a function scaling between a minimum and maximum amount of provisioned concurrency based on utilization. When the number of open requests increases, Application Auto Scaling increases provisioned concurrency in large steps until it reaches the configured maximum. The function continues to scale on standard concurrency until utilization starts to drop. When utilization is consistently low, Application Auto Scaling decreases provisioned concurrency in smaller periodic steps.
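One way to make those two API calls from Python is with boto3's `application-autoscaling` client. The sketch below is a hedged example rather than a recommended configuration: the function name `my-function`, the alias `BLUE`, the capacity bounds, the policy name, and the 0.7 target utilization are all assumptions. The request parameters are built separately from the calls so they can be inspected without AWS credentials.

```python
def build_scaling_requests(function_name, alias, min_capacity,
                           max_capacity, target_utilization=0.7):
    """Build parameters for RegisterScalableTarget and PutScalingPolicy."""
    common = {
        "ServiceNamespace": "lambda",
        "ResourceId": f"function:{function_name}:{alias}",
        "ScalableDimension": "lambda:function:ProvisionedConcurrency",
    }
    target = {**common,
              "MinCapacity": min_capacity,
              "MaxCapacity": max_capacity}
    policy = {
        **common,
        "PolicyName": f"{function_name}-{alias}-utilization",  # assumed name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType":
                    "LambdaProvisionedConcurrencyUtilization",
            },
        },
    }
    return target, policy


def apply_scaling(target, policy):
    """Send both requests to AWS. Requires boto3 and valid credentials."""
    import boto3  # imported lazily so the builder works without boto3
    client = boto3.client("application-autoscaling")
    client.register_scalable_target(**target)
    client.put_scaling_policy(**policy)


target, policy = build_scaling_requests("my-function", "BLUE", 1, 100)
print(target["ResourceId"])  # function:my-function:BLUE
# apply_scaling(target, policy)  # uncomment to call AWS
```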

AWS Lambda Scaling – autoscaling with provisioned concurrency

When you invoke your function asynchronously, by using an event source mapping or another AWS service, scaling behavior varies. For example, an event source mapping that reads from a stream is limited by the number of shards in the stream. Scaling capacity that is unused by an event source is available for use by other clients and event sources.