AWS Lambda EventSourceMapping

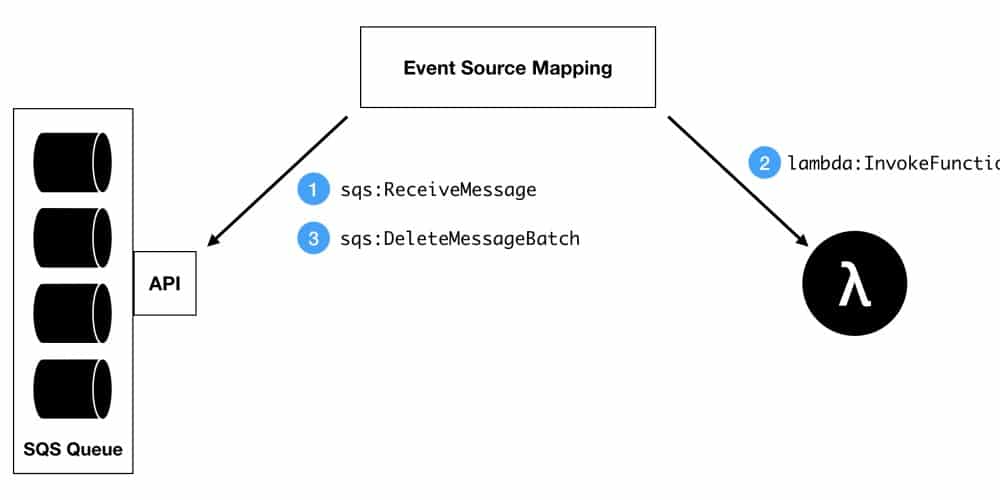

What is AWS Lambda EventSourceMapping

The AWS::Lambda::EventSourceMapping resource creates a mapping between an event source and a Lambda function. Lambda reads items from the event source and invokes the function with them.

Its Syntax

To declare this entity in a CloudFormation template, use the following syntax:

– JSON

    {
      "Type" : "AWS::Lambda::EventSourceMapping",
      "Properties" : {
          "BatchSize" : Integer,
          "BisectBatchOnFunctionError" : Boolean,
          "DestinationConfig" : DestinationConfig,
          "Enabled" : Boolean,
          "EventSourceArn" : String,
          "FunctionName" : String,
          "MaximumBatchingWindowInSeconds" : Integer,
          "MaximumRecordAgeInSeconds" : Integer,
          "MaximumRetryAttempts" : Integer,
          "ParallelizationFactor" : Integer,
          "StartingPosition" : String
        }
    }

– YAML

    Type: AWS::Lambda::EventSourceMapping
    Properties:
      BatchSize: Integer
      BisectBatchOnFunctionError: Boolean
      DestinationConfig: DestinationConfig
      Enabled: Boolean
      EventSourceArn: String
      FunctionName: String
      MaximumBatchingWindowInSeconds: Integer
      MaximumRecordAgeInSeconds: Integer
      MaximumRetryAttempts: Integer
      ParallelizationFactor: Integer
      StartingPosition: String

Its Properties:

BatchSize

The maximum number of items to retrieve in a single batch.

– Amazon Kinesis: Default of 100. Max of 10,000.

– Amazon DynamoDB Streams: Default of 100. Max of 1,000.

– Amazon Simple Queue Service: Default of 10. Max of 10.

Required? No.

Type? Integer.

Minimum? 1.

Maximum? 10000.

Update requires? No interruption!
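As a minimal sketch of how the per-source defaults and maximums above could be applied, the following Python helper picks a batch size for a given source. The names `BATCH_SIZE_LIMITS` and `resolve_batch_size` are hypothetical, and the numbers are simply the ones listed in this article, so they may not match current AWS limits.

```python
# Defaults and maximums as listed in this article (assumption: they may
# differ from the limits AWS enforces today).
BATCH_SIZE_LIMITS = {
    "kinesis": {"default": 100, "max": 10_000},
    "dynamodb": {"default": 100, "max": 1_000},
    "sqs": {"default": 10, "max": 10},
}


def resolve_batch_size(source, requested=None):
    """Return the batch size to use for a source, or raise ValueError."""
    limits = BATCH_SIZE_LIMITS[source]
    if requested is None:
        # No explicit BatchSize: fall back to the documented default.
        return limits["default"]
    if not 1 <= requested <= limits["max"]:
        raise ValueError(
            f"BatchSize for {source} must be between 1 and {limits['max']}"
        )
    return requested


print(resolve_batch_size("kinesis"))        # uses the default for Kinesis
print(resolve_batch_size("dynamodb", 500))  # within range, returned as-is
```

A request above the per-source maximum, for example 11 for an SQS queue, raises a `ValueError` in this sketch.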

BisectBatchOnFunctionError

-Streams- If the function returns an error, Lambda splits the batch into two parts and retries.

Required? No.

Type? Boolean.

Update requires? No interruption!
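The idea behind bisecting can be illustrated with a small simulation. This is only a sketch of the split-and-retry principle, not Lambda's actual implementation: the real service also applies retry and record-age limits. The functions `process_with_bisect` and `handler` are hypothetical.

```python
def process_with_bisect(batch, handler):
    """Invoke handler on batch; on error, split in two and retry each half.

    Returns the records that still fail when processed on their own.
    """
    if not batch:
        return []
    try:
        handler(batch)
        return []
    except Exception:
        if len(batch) == 1:
            # Cannot split further: this single record causes the failure.
            return list(batch)
        mid = len(batch) // 2
        left = process_with_bisect(batch[:mid], handler)
        right = process_with_bisect(batch[mid:], handler)
        return left + right


def handler(records):
    # Hypothetical function that fails when it meets a malformed record.
    if any(record == "bad" for record in records):
        raise ValueError("malformed record")


failed = process_with_bisect(["a", "b", "bad", "c"], handler)
print(failed)
```

Splitting narrows a failure down to the offending records, so the healthy records in the same batch can still be processed.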

DestinationConfig

-Streams- An Amazon SQS queue or Amazon SNS topic destination for discarded records.

Required? No.

Type? DestinationConfig.

Update requires? No interruption!

Enabled

When set to false, this disables the event source mapping to pause polling and invocation.

Required? No.

Type? Boolean.

Update requires? No interruption!

EventSourceArn

The Amazon Resource Name (ARN) of your event source.

– Amazon Kinesis: ARN of data stream or stream consumer.

– Amazon DynamoDB Streams: ARN of stream.

– Amazon Simple Queue Service: ARN of queue.

Required? Yes.

Type? String.

Pattern? arn:(aws[a-zA-Z0-9-]*):([a-zA-Z0-9\-])+:([a-z]{2}(-gov)?-[a-z]+-\d{1})?:(\d{12})?:(.*)

Update requires? Replacement!
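The pattern above can be tried out locally before deploying a template. The following Python sketch compiles it unchanged and checks a few sample ARNs; the helper name `is_valid_event_source_arn` and the sample ARNs are illustrative assumptions.

```python
import re

# Pattern copied verbatim from the EventSourceArn property above.
EVENT_SOURCE_ARN = re.compile(
    r"arn:(aws[a-zA-Z0-9-]*):([a-zA-Z0-9\-])+:"
    r"([a-z]{2}(-gov)?-[a-z]+-\d{1})?:(\d{12})?:(.*)"
)


def is_valid_event_source_arn(arn):
    """Return True if the whole string matches the documented pattern."""
    return EVENT_SOURCE_ARN.fullmatch(arn) is not None


samples = [
    "arn:aws:kinesis:us-east-2:123456789012:stream/mystream",  # made-up stream
    "arn:aws:sqs:us-east-2:123456789012:my-queue",             # made-up queue
    "my-queue",                                                # not an ARN
]
for sample in samples:
    print(sample, is_valid_event_source_arn(sample))
```

A match only means the string has the shape of an ARN. It does not confirm that the resource exists or that Lambda supports it as an event source.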

FunctionName

Name of your Lambda function.

Formats:

- Function name: MyFunction

- Function ARN: arn:aws:lambda:us-west-2:123456789012:function:MyFunction

- Version or Alias ARN: arn:aws:lambda:us-west-2:123456789012:function:MyFunction:PROD

- Partial ARN: 123456789012:function:MyFunction

The length constraint applies only to the full ARN. If you specify only the function name, it is limited to 64 characters.

Required? Yes.

Type? String.

Minimum? 1.

Maximum? 140.

Pattern? (arn:(aws[a-zA-Z-]*)?:lambda:)?([a-z]{2}(-gov)?-[a-z]+-\d{1}:)?(\d{12}:)?(function:)?([a-zA-Z0-9-_]+)(:(\$LATEST|[a-zA-Z0-9-_]+))?

Update requires? No interruption!
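To see how the four accepted formats map onto the pattern above, here is a rough Python sketch that extracts the function name and optional qualifier. The function `parse_function_name` is hypothetical, and the group numbers (7 for the name, 9 for the qualifier) come from counting the parentheses in the documented pattern.

```python
import re

# Pattern copied from the FunctionName property above, split for readability.
FUNCTION_NAME = re.compile(
    r"(arn:(aws[a-zA-Z-]*)?:lambda:)?"
    r"([a-z]{2}(-gov)?-[a-z]+-\d{1}:)?"
    r"(\d{12}:)?"
    r"(function:)?"
    r"([a-zA-Z0-9-_]+)"
    r"(:(\$LATEST|[a-zA-Z0-9-_]+))?"
)


def parse_function_name(value):
    """Return {'name', 'qualifier'} or None if the value is not accepted."""
    if not 1 <= len(value) <= 140:
        return None
    match = FUNCTION_NAME.fullmatch(value)
    if match is None:
        return None
    name = match.group(7)
    # A bare function name (no ARN parts) is limited to 64 characters.
    has_arn_parts = any(match.group(i) for i in (1, 3, 5, 6))
    if not has_arn_parts and len(name) > 64:
        return None
    return {"name": name, "qualifier": match.group(9)}


for value in (
    "MyFunction",
    "arn:aws:lambda:us-west-2:123456789012:function:MyFunction",
    "arn:aws:lambda:us-west-2:123456789012:function:MyFunction:PROD",
    "123456789012:function:MyFunction",
):
    print(value, "->", parse_function_name(value))
```

Returning `None` for rejected values keeps the sketch short; a stricter tool might raise an error that names the violated constraint.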

MaximumBatchingWindowInSeconds

-Streams- The maximum amount of time, in seconds, to gather records before invoking the function.

Required? No.

Type? Integer.

Minimum? 0.

Maximum? 300.

Update requires? No interruption!

MaximumRecordAgeInSeconds

-Streams- The maximum age of a record that Lambda sends to the function for processing.

Required? No.

Type? Integer.

Minimum? 60.

Maximum? 604800.

Update requires? No interruption!

MaximumRetryAttempts

-Streams- The maximum number of times to retry when the function returns an error.

Required? No.

Type? Integer.

Minimum? 0.

Maximum? 10000.

Update requires? No interruption!

ParallelizationFactor

-Streams- The number of batches to process from each shard concurrently.

Required? No.

Type? Integer.

Minimum? 1.

Maximum? 10.

Update requires? No interruption!

StartingPosition

The position in a stream from which to start reading. It is required for Amazon Kinesis and Amazon DynamoDB Streams sources.

– LATEST: Read only new records.

– TRIM_HORIZON: Process all available records.

Required? No.

Type? String.

Update requires? Replacement!
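The required flags and numeric ranges listed for the properties above lend themselves to a quick pre-deployment check. The following Python sketch is one possible way to do that; `validate_properties` is a hypothetical helper, and the ranges are the ones stated in this article rather than values fetched from AWS.

```python
# Ranges copied from the property descriptions above (assumption: AWS may
# have changed them since this article was written).
INTEGER_RANGES = {
    "BatchSize": (1, 10000),
    "MaximumBatchingWindowInSeconds": (0, 300),
    "MaximumRecordAgeInSeconds": (60, 604800),
    "MaximumRetryAttempts": (0, 10000),
    "ParallelizationFactor": (1, 10),
}
REQUIRED = ("EventSourceArn", "FunctionName")


def validate_properties(props):
    """Return a list of problems found; an empty list means none."""
    problems = []
    for key in REQUIRED:
        if not props.get(key):
            problems.append(f"{key} is required")
    for key, (low, high) in INTEGER_RANGES.items():
        if key in props and not low <= props[key] <= high:
            problems.append(f"{key} must be between {low} and {high}")
    return problems


print(validate_properties({
    "EventSourceArn": "arn:aws:kinesis:us-east-2:123456789012:stream/mystream",
    "FunctionName": "MyFunction",
    "ParallelizationFactor": 11,  # outside the documented 1 to 10 range
}))
```

The check covers only what this article documents. CloudFormation and Lambda perform their own validation at deployment time.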

Values Returned

– Ref

When you pass the logical ID of this resource to the intrinsic Ref function, Ref returns the mapping’s ID.

Some Examples for Event Source Mapping

The following example creates an event source mapping that reads events from Amazon Kinesis and invokes a Lambda function defined in the same template.

– JSON

    "EventSourceMapping": {
        "Type": "AWS::Lambda::EventSourceMapping",
        "Properties": {
            "EventSourceArn": {
                "Fn::Join": [
                    "",
                    [
                        "arn:aws:kinesis:",
                        {
                            "Ref": "AWS::Region"
                        },
                        ":",
                        {
                            "Ref": "AWS::AccountId"
                        },
                        ":stream/",
                        {
                            "Ref": "KinesisStream"
                        }
                    ]
                ]
            },
            "FunctionName": {
                "Fn::GetAtt": [
                    "LambdaFunction",
                    "Arn"
                ]
            },
            "StartingPosition": "TRIM_HORIZON"
        }
    }

– YAML

    MyEventSourceMapping:
      Type: AWS::Lambda::EventSourceMapping
      Properties:
        EventSourceArn:
          Fn::Join:
            - ""
            -
              - "arn:aws:kinesis:"
              -
                Ref: "AWS::Region"
              - ":"
              -
                Ref: "AWS::AccountId"
              - ":stream/"
              -
                Ref: "KinesisStream"
        FunctionName:
          Fn::GetAtt:
            - "LambdaFunction"
            - "Arn"
        StartingPosition: "TRIM_HORIZON"

How to Configure a Stream as an Event Source?

To configure your function to read from DynamoDB Streams in the Lambda console, create a DynamoDB trigger.

Creating a trigger

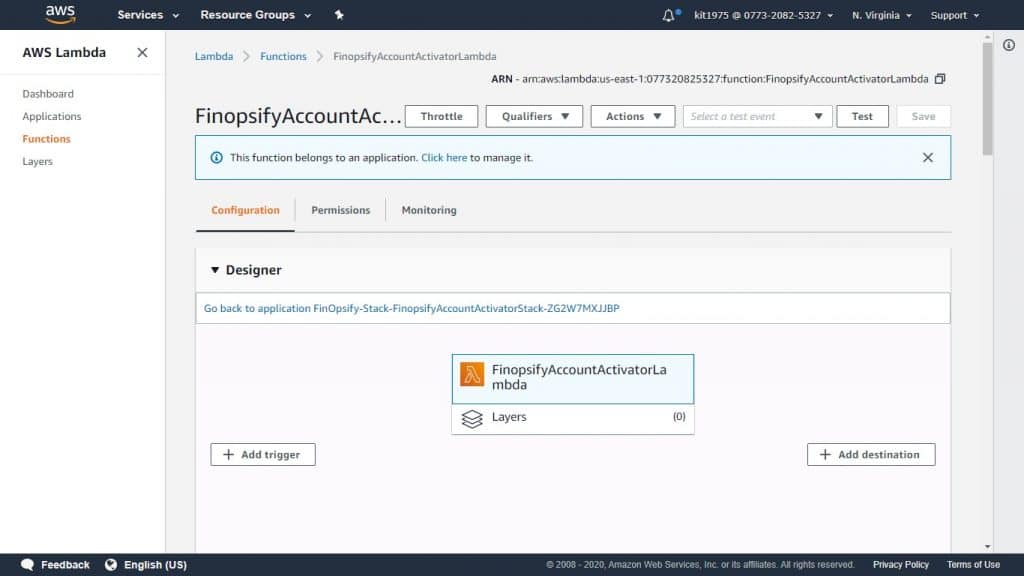

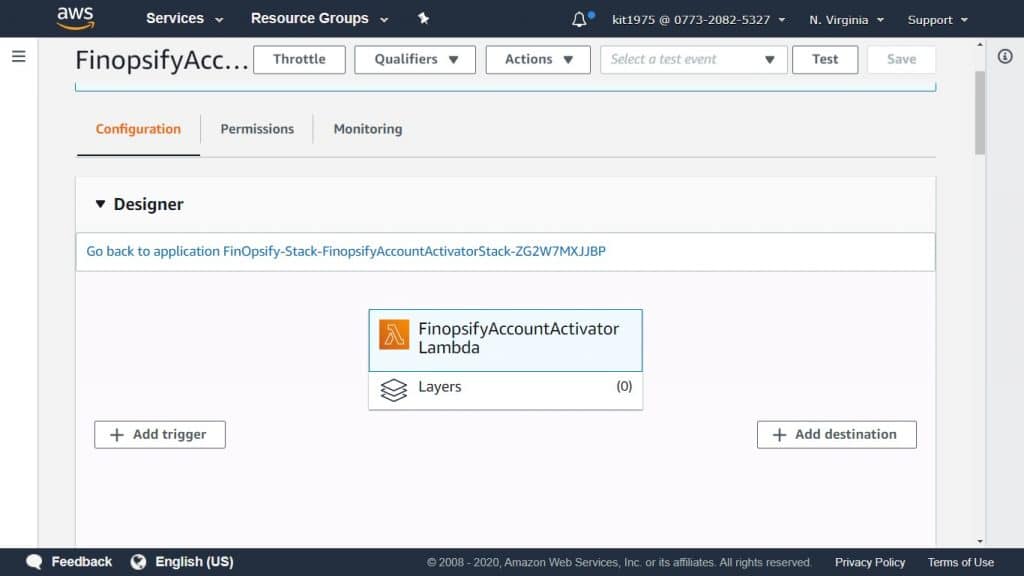

- Go to the Lambda console Functions page

- Select a function.

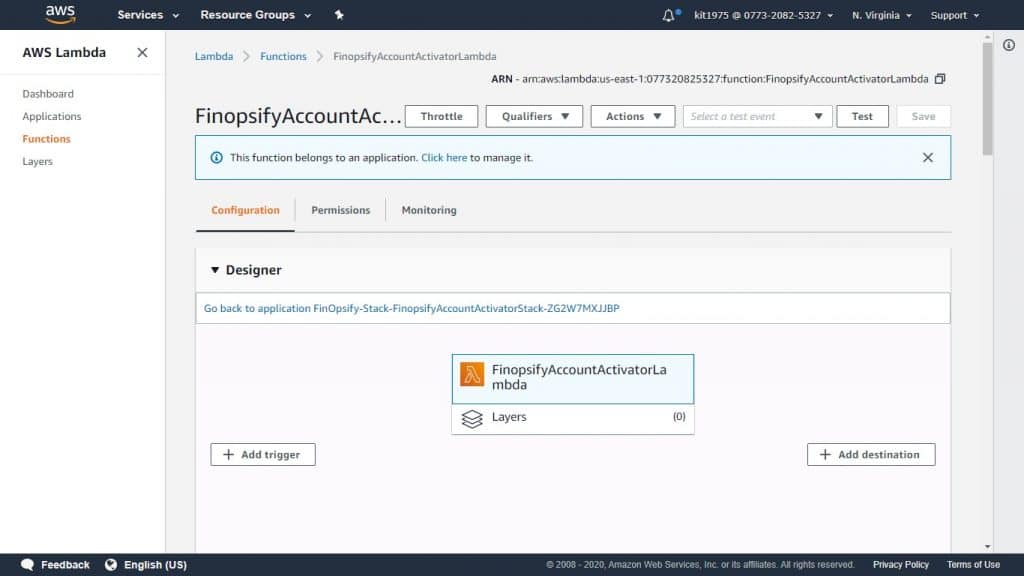

- Under Designer, click Add trigger.

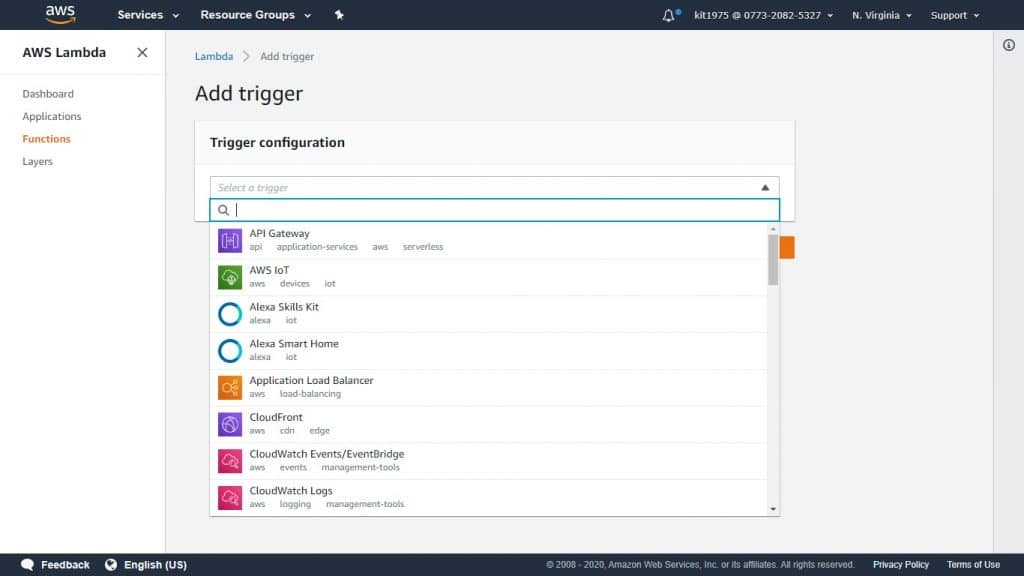

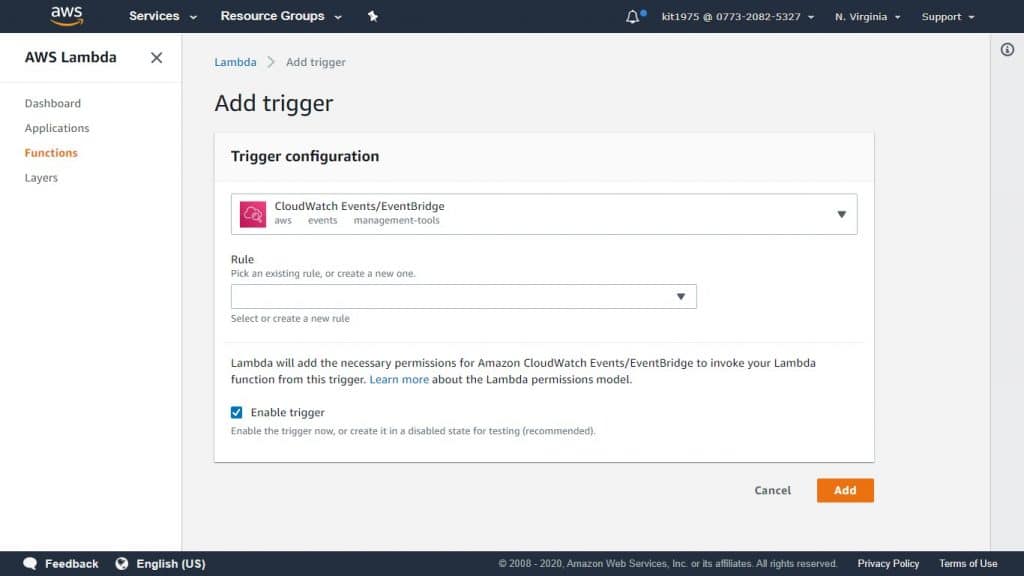

- Select a trigger type.

- Configure the required options, then click Add.

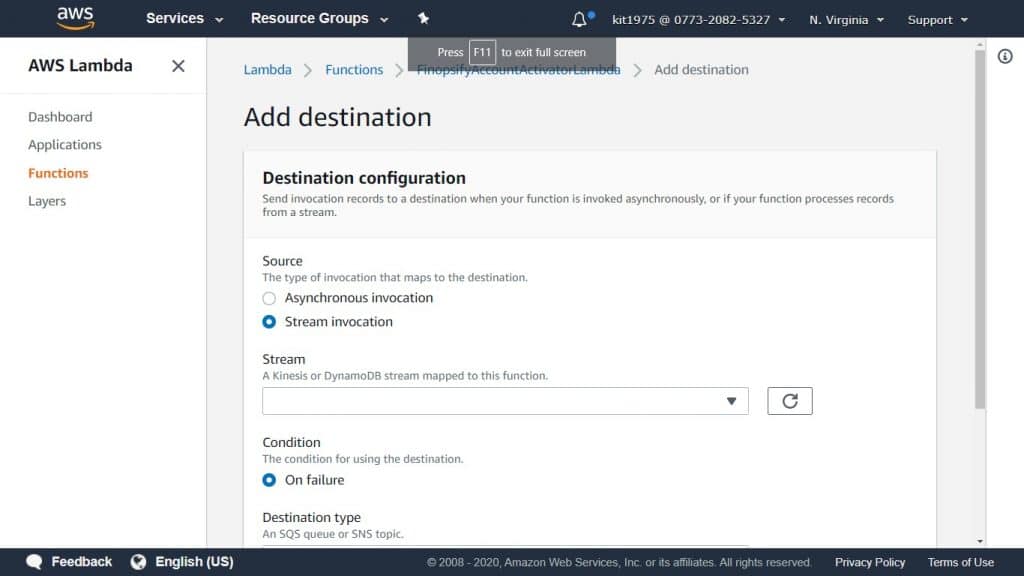

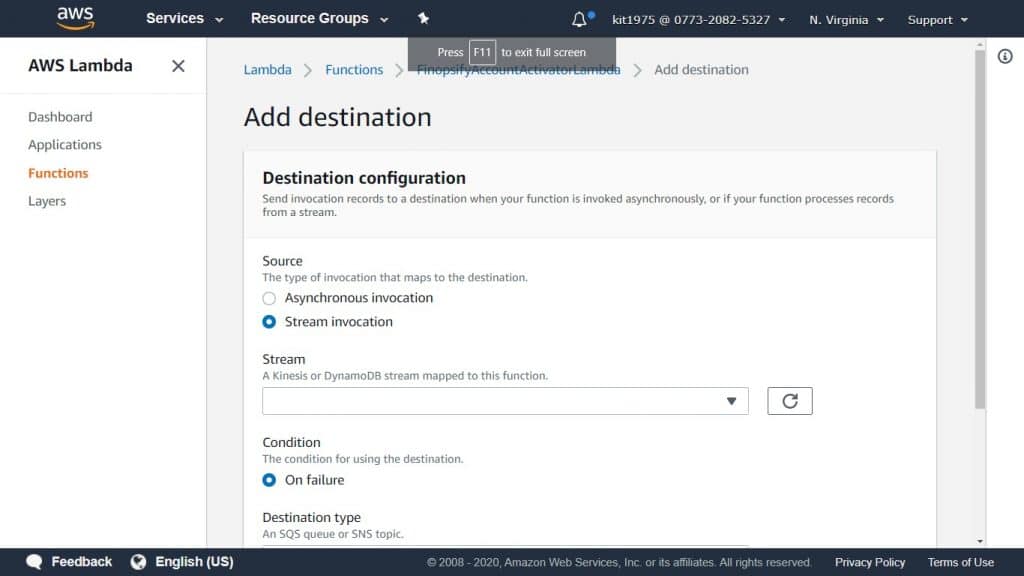

How to Configure a Destination for Failed-Event Records?

- Go to the Lambda console Functions page.

- Select a function.

- Under Designer, click Add destination.

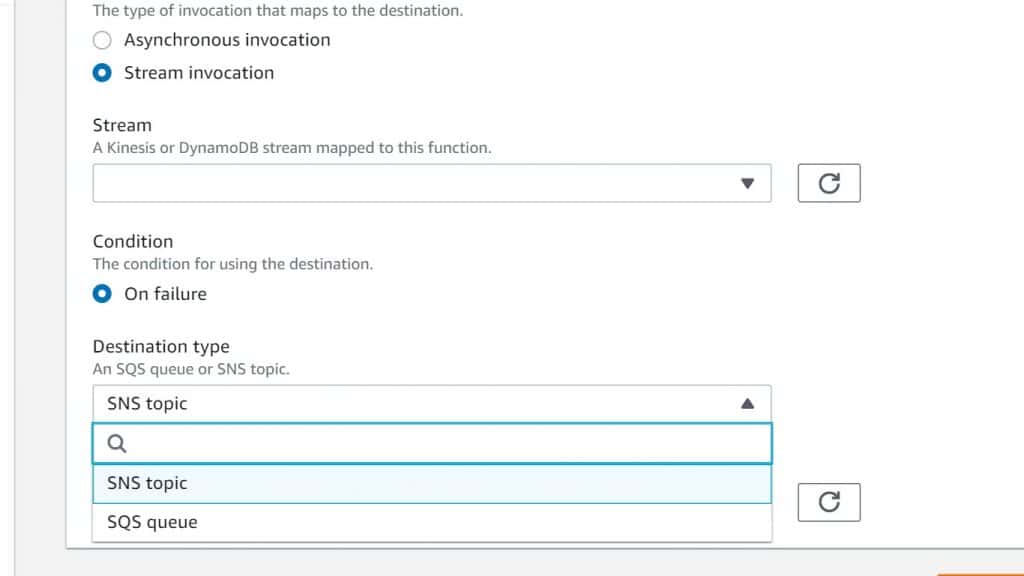

- For Source, select Stream invocation.

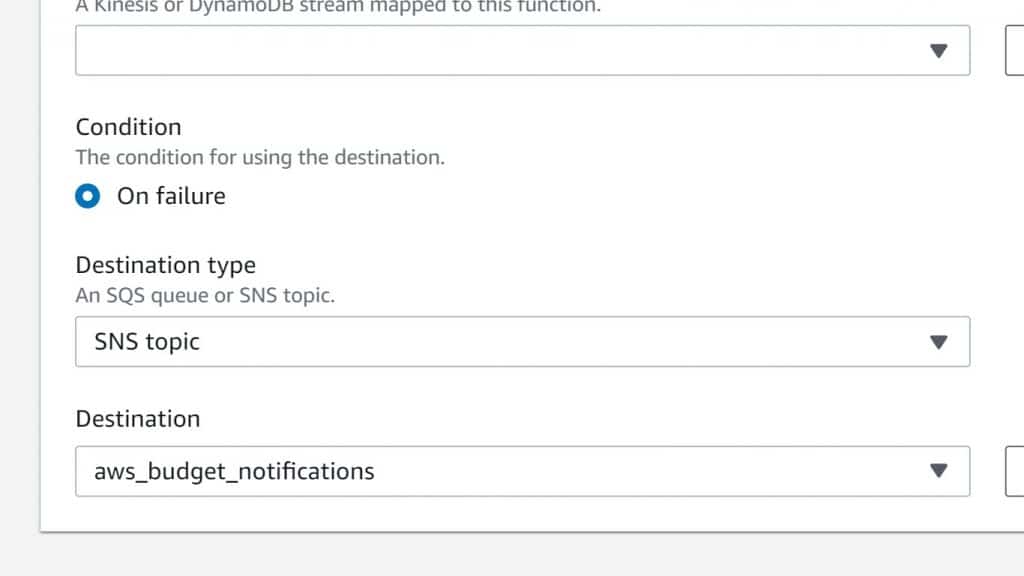

- For Stream, select a stream that is mapped to your function.

- For Destination type, select the type of resource that receives the invocation record.

- For Destination, select a resource.

- Click on Save.

The following example shows an invocation record for a DynamoDB stream.

Invocation Record

    {
        "requestContext": {
            "requestId": "316aa6d0-8154-xmpl-9af7-85d5f4a6bc81",
            "functionArn": "arn:aws:lambda:us-east-2:123456789012:function:myfunction",
            "condition": "RetryAttemptsExhausted",
            "approximateInvokeCount": 1
        },
        "responseContext": {
            "statusCode": 200,
            "executedVersion": "$LATEST",
            "functionError": "Unhandled"
        },
        "version": "1.0",
        "timestamp": "2019-11-14T00:13:49.717Z",
        "DDBStreamBatchInfo": {
            "shardId": "shardId-00000001573689847184-864758bb",
            "startSequenceNumber": "800000000003126276362",
            "endSequenceNumber": "800000000003126276362",
            "approximateArrivalOfFirstRecord": "2019-11-14T00:13:19Z",
            "approximateArrivalOfLastRecord": "2019-11-14T00:13:19Z",
            "batchSize": 1,
            "streamArn": "arn:aws:dynamodb:us-east-2:123456789012:table/mytable/stream/2019-11-14T00:04:06.388"
        }
    }

You can use this information to retrieve the affected records from the stream for troubleshooting. The actual records are not included, so you must process this invocation record and retrieve them from the stream before they expire and are lost.
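As a hedged illustration of that retrieval step, the Python sketch below reads the `DDBStreamBatchInfo` part of an invocation record and builds the parameters one would need to locate the first affected record. The helper `shard_iterator_request` is hypothetical. Its output keys mirror the parameters of the DynamoDB Streams GetShardIterator operation, but calling that operation with them (for example through an SDK) is an assumption that the original text does not show.

```python
import json


def shard_iterator_request(invocation_record):
    """Build lookup parameters for the first record of the failed batch."""
    info = invocation_record["DDBStreamBatchInfo"]
    return {
        "StreamArn": info["streamArn"],
        "ShardId": info["shardId"],
        # Start reading exactly at the first failed record.
        "ShardIteratorType": "AT_SEQUENCE_NUMBER",
        "SequenceNumber": info["startSequenceNumber"],
    }


# Trimmed copy of the invocation record shown above.
record = json.loads("""
{
    "requestContext": {"condition": "RetryAttemptsExhausted"},
    "DDBStreamBatchInfo": {
        "shardId": "shardId-00000001573689847184-864758bb",
        "startSequenceNumber": "800000000003126276362",
        "endSequenceNumber": "800000000003126276362",
        "batchSize": 1,
        "streamArn": "arn:aws:dynamodb:us-east-2:123456789012:table/mytable/stream/2019-11-14T00:04:06.388"
    }
}
""")

print(shard_iterator_request(record))
```

Reading would then continue from that position until `endSequenceNumber` is reached, so that exactly the records of the failed batch are recovered.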